A short while back I read a post from someone talking about the sound quality of the Renoise audio engine, and I didn’t really take much notice of it until I began recording a lot more hardware synthesizers and guitars. I would do a mix and everything would sound good until I bounced my audio down, and Renoise would add a dulling sound to my mix, often resulting in my guitars not cutting through. I thought this could have been phasing issues with my multi-track recording methods, but this wasn’t the case. I recently got hold of Cubase 5 and used Renoise in ReWire mode. The sound quality using Cubase’s sound engine is unbelievable, a massive difference can be heard. Just wondering if anyone else has noticed any of this ‘dulling’ characteristic?

sigh

But on a more serious note: The usual cause for what you hear is either 1) your eyes, 2) your emotional attachment to the programme in question or 3) a difference in volume levels (especially since Renoise leaves 6dB, or whatever it is, headroom).

fladd

post up the tracks so we can compare them? (and don’t write what’s what of course)

6 dB in volume will not drastically alter upper mid-range clarity; if anything it would alter bass frequency content. I will get some examples shortly. Has anyone on here recorded external instruments and run into a similar problem? I am not bashing Renoise, I have used it for 3 years and will continue to. I just find the audio engine to be dulling, so I have found a way to get my sound across by mixing in another program.

A brighter skin? What an odd thing to say. I assumed I would get responses such as these. All programs have flaws, and knowing them allows music to be made effectively. All equipment has flaws, and I feel this is Renoise’s.

Please provide these samples as uncompressed .wav or .flac, and use the exact same audio source each time so we can do a direct comparison.
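One way to run that direct comparison is a null test: level-match the two renders, subtract them sample by sample, and look at what is left. Here is a minimal sketch in plain Python, assuming both renders are already loaded as time-aligned, equal-length lists of floats in the range -1..1. The function names are made up for illustration and are not part of Renoise or Cubase.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def null_test_db(a, b):
    """Level-match b to a, subtract, and return the residual in dB relative to a.

    A very negative result means the two renders are practically identical
    apart from overall gain. A result near 0 dB means they really differ.
    """
    if len(a) != len(b):
        raise ValueError("renders must be the same length and time-aligned")
    level_a, level_b = rms(a), rms(b)
    if level_a == 0.0 or level_b == 0.0:
        raise ValueError("cannot level-match a silent render")
    gain = level_a / level_b          # removes a plain volume difference
    residual = [x - gain * y for x, y in zip(a, b)]
    level_r = rms(residual)
    if level_r == 0.0:
        return float("-inf")          # perfect null
    return 20.0 * math.log10(level_r / level_a)
```

If the only difference between two renders is volume, the residual should sit far below the music. Anything that survives the subtraction is the actual difference worth listening to.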

And just to clarify things… are you saying that Renoise sounds better to you when rewired through Cubase?

When a program is rewired, the master becomes the audio engine for the project, so I used another program as the master and Renoise as the slave.

I understand how ReWire works on the basic level, but I don’t use it myself to be honest. Could you tell me a bit more about how you have things set up? Do you just have the master output from Renoise sent into Cubase as a single stereo track (which is obviously being mixed by Renoise in that case), or do you have all your separate elements/tracks in Renoise broken down into individual channels which are being sent from Renoise and then mixed together within Cubase itself?

If you could provide more information, then it would help us try to get to the bottom of this.

So maybe renoise is not dulling but cubase is brightening?

(wasn’t meant to be a serious reply. Sry. continue.)

Thing is, he said it sounds bright when playing in Renoise and only dull on the render. During playback Renoise suffers from aliasing, which will create some high frequencies that shouldn’t actually be there and may sometimes be perceived as brightness in the sound (although they are not always harmonic, due to how aliasing works).
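For reference, the way aliasing puts energy where it should not be comes down to a little arithmetic. The sketch below assumes an idealised case with no anti-aliasing filtering at all. Real resamplers attenuate these components to some degree, so treat it as an illustration of where the fold-back lands, not of how loud it is.

```python
def alias_frequency(f_hz, sample_rate_hz):
    """Frequency at which a tone at f_hz shows up after sampling at sample_rate_hz."""
    nyquist = sample_rate_hz / 2.0
    folded = f_hz % sample_rate_hz
    if folded > nyquist:
        # Components above Nyquist mirror back down into the audible band.
        folded = sample_rate_hz - folded
    return folded
```

For example, the fifth harmonic of a 7 kHz tone sits at 35 kHz. At a 48 kHz sample rate, `alias_frequency(35000, 48000)` folds it down to 13 kHz, which is not a whole-number multiple of 7 kHz. That is the "not always harmonic" part.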

Is there a Render mode that actually creates it as you hear it when playing? I know there is Cubic and Arguru’s Sinc, but I don’t know if either method is used in normal playing, or some more basic interpolation again. If there currently isn’t a render interpolation that is the same as the playback engine, it could be a good idea. Could dithering also make a subtle difference?

Also, when doing Render Selection To Sample or any similar recordings (VST to Instrument etc etc) is there any way to set these preferences?

Many programs are affected in the rendering stage. I have certificates in Logic and Pro Tools, both of which sound very different in the rendering stage. Renoise only sounds dull to me in the rendering stage. I have high quality equipment at hand and am implementing high quality dither, so there should be no issue. To answer an earlier question, I am taking the output of every track in Renoise and doing a full mix elsewhere, and I don’t believe Cubase is ‘brightening’ the signal. When I press play in Renoise it sounds good; when I bounce there are high frequency issues, which result in a loss of audio quality. When I press play in Cubase it sounds good, and when I bounce it sounds the same. I used to make jungle, breakcore etc. and never noticed it. It was only when my studio became better equipped and I started recording guitars, bass, synthesizers, pianos etc. that I noticed a fundamental and frustrating change within the audio engine.

As far as I know, Cubic is used during realtime playback. I always use Cubic when rendering to .WAV, and I always get identical results to what I hear during live playback.

I’m not disputing your skills or the fact that you may be experiencing a problem here, so I apologise if you’re getting that impression. I was just trying to get a better understanding of your setup. So far you’ve said nothing about the rendering options you’ve used in Renoise, what your sample rate and bit depth is set to, whether you have soft clipping or dithering enabled, etc. Also, how do your render settings differ from your realtime playback settings, are you using different sample rates for each, etc.

I think we’ll just have to wait for you to provide some audio examples that demonstrate a noticeable problem or a clear difference in sound.

Hmm, the standard setting is Arguru’s Sinc, and it says ‘perfect’, so I always figured I should use that, since it’s perfect. Would it now turn out to be not so perfect?

No such thing as perfect when it comes to guessing!

I believe Arguru’s Sinc smooths a little more than Cubic. I have heard people comment that it sounds a little less sparkly, and I know it has got rid of some interesting noise effects I’ve had with certain VSTs in the past (so that may well be due to aliasing or similar artifacts…)

TWIIIIIIIIXXXXXX!!! lol

It is getting a little odd around here lately, huh?

I believe it defaults to Cubic. I recently installed Renoise on a freshly formatted laptop and it defaulted to Cubic there.

Anyway, the term ‘perfect’ is a bit misleading. Sinc interpolation is commonly referred to as an ideal or ‘perfect’ method for reconstructing signals, but that does not mean that it will always produce a musically pleasing result. In certain very rare cases it can apparently lead to weirdness in very high frequency sounds, for example. I say apparently because I’ve never run into it myself, but there have been some other posts here on the forum that demonstrate it, I believe. Anyway, most of the time it’s absolutely fine.

More info on sinc:

Cubic interpolation, although humbly labelled as ‘very good’ in Renoise, is actually pretty great and more than capable of handling whatever you throw at it. In my opinion, if you want your renders to sound exactly like your live playback with no surprises, then use Cubic.
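To make the cubic vs. sinc comparison a little more concrete, here is a rough sketch of the two families of interpolator. It assumes a textbook Catmull-Rom cubic and a Hann-windowed sinc with an arbitrary kernel size. Renoise’s actual Cubic and Arguru’s Sinc implementations are not documented in this thread and may well differ, so read this as a generic illustration only.

```python
import math

def cubic_interp(x, t):
    """Catmull-Rom cubic interpolation of samples x at fractional index t."""
    i = int(math.floor(t))
    f = t - i
    p0, p1, p2, p3 = x[i - 1], x[i], x[i + 1], x[i + 2]
    return p1 + 0.5 * f * (
        (p2 - p0)
        + f * ((2 * p0 - 5 * p1 + 4 * p2 - p3)
               + f * (3 * (p1 - p2) + p3 - p0))
    )

def sinc_interp(x, t, half_width=16):
    """Hann-windowed sinc interpolation of samples x at fractional index t."""
    i = int(math.floor(t))
    total = 0.0
    for k in range(i - half_width + 1, i + half_width + 1):
        d = t - k
        window = 0.5 * (1.0 + math.cos(math.pi * d / half_width))  # Hann taper
        kernel = 1.0 if d == 0.0 else math.sin(math.pi * d) / (math.pi * d)
        total += x[k] * window * kernel
    return total

def max_midpoint_error(interp, cycles_per_sample, n=256, margin=32):
    """Largest error when interp rebuilds a sine halfway between its samples."""
    w = 2.0 * math.pi * cycles_per_sample
    x = [math.sin(w * k) for k in range(n)]
    worst = 0.0
    for i in range(margin, n - margin):
        t = i + 0.5
        worst = max(worst, abs(interp(x, t) - math.sin(w * t)))
    return worst
```

Comparing `max_midpoint_error(cubic_interp, 0.25)` with `max_midpoint_error(sinc_interp, 0.25)` (a tone at a quarter of the sample rate, i.e. 12 kHz at 48 kHz) gives a much larger error for the cubic in this toy setup. That is the sense in which sinc is called ‘ideal’. It says nothing about which one sounds nicer on real material, and it only applies to samples being played off their base pitch.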

The interpolation is only applied to sample-based instruments, not the output from VST plug-ins. There’s no need to interpolate or reconstruct the output from the VST, since it’s always outputting audio at exactly the correct sampling rate. It’s up to the VST itself to take care of its own interpolation internally.

Anyway… let’s not get too off-topic here. I don’t believe any of this is responsible for ‘dulling’ of sound. It’s very easy to do some frequency analysis and demonstrate that there is no observable weirdness going on, no obvious dips in the frequency response, etc. It’s also been proven that Renoise scores very well in tests for this type of thing - even better when rendering.

@dblue: thanks for the elaborate explanation. You might be right that it does not default to Sinc. I might have forgotten setting it, especially seeing how often (not very) I export songs from Renoise.

Any addition of signals will require interpolation, especially when going from 32-bit float to a 16-bit (or 24-bit) render, as the calculated point of the summing will very often fall between the discrete points of 16/24-bit recordings. Sure, it doesn’t need to interpolate as much, i.e. only whenever it is not playing at the base note, as the original wave is changed internally, but this cannot be the only place interpolation happens. Unless you are saying Renoise just uses Nearest Value for summing calculations. Although I believe this is a moot point, as if my memory serves me, it was an effect (a filter, I think) driven pretty much into overdrive that I noticed the difference with, not a synth.
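The float-to-fixed step described here is more commonly called quantization (rounding each value to the nearest available step, optionally with dither) than interpolation. Below is a rough sketch of that step, assuming plain TPDF dither and a 16-bit target. It is a generic illustration, not the code used by Renoise or by any particular dither plug-in.

```python
import random

def quantize_16bit(samples, dither=True, seed=0):
    """Round float samples in [-1, 1] to 16-bit integers, optionally with TPDF dither."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    out = []
    for s in samples:
        v = s * 32767.0
        if dither:
            # Triangular (TPDF) noise spanning +/- 1 LSB: the sum of two
            # independent uniform values of +/- 0.5 LSB each.
            v += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        q = int(round(v))
        out.append(max(-32768, min(32767, q)))  # clamp to the 16-bit range
    return out
```

Without dither every value simply snaps to the nearest 16-bit step. With dither a tiny amount of noise decides which neighbouring step a value lands on, which trades quantization distortion for a constant, very low noise floor.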

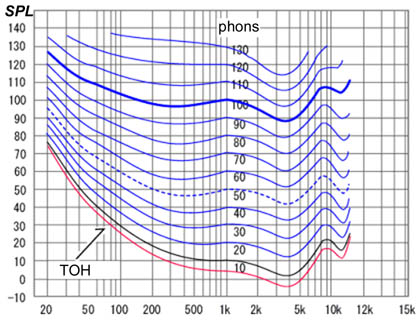

Also, with regard to Nathanlevi’s comment about it being the low frequencies that would be noticed most with a drop in level, remember that our threshold of hearing is a curve, with both bass and treble needing more energy to be heard at the same level. This is why the classic Loudness button on stereos is a Smiley Face curve.

I do personally think a lot of the differences people think they hear are due to the -6dB render, although quite possibly not all.
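Whatever the exact headroom figure turns out to be, the arithmetic behind level matching is simple. A small sketch, assuming the 6 dB value quoted in this thread (not checked against Renoise itself):

```python
import math

def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def gain_to_db(gain):
    """Convert a linear amplitude factor to a level change in dB."""
    return 20.0 * math.log10(gain)

def apply_gain_db(samples, db):
    """Scale a list of float samples by a level change given in dB."""
    g = db_to_gain(db)
    return [s * g for s in samples]
```

A -6 dB render has roughly half the amplitude of the live signal, so it would need `apply_gain_db(render, 6.0)`, or simply turning the monitoring up by 6 dB, before any fair A/B comparison.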

I use 24-bit 48 kHz, and 16-bit dithering when rendering to .WAV. I use Sonnox dither and no soft clipping, and I have used both interpolation settings. I just believe it is purely a problem with the sources I am recording, as I am very precise on my mic choice, amp choice, preamp settings etc. My point was merely that I hear a noticeable change when rendering, and I wondered if anyone here has noticed the same? Anyone here record guitars, strings, synths etc.?

Not to nitpick, or stray off topic, but what is the need for -6dB renders? Shouldn’t we be the ones who decide what volume our renders come out at?