You could post some audio or an xrns here and concrete strategies would likely follow lol

No shortage of opinions round these parts

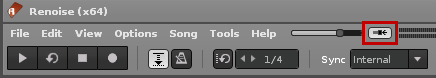

Yes, but there’s no way around cutting the low end on EVERY single track except the parts you want to keep bassy, and there’s also no way around using compressors if you want to get rid of peaks, and the same goes for limiting on your master track. You need to tame the peaks if you want a consistent volume on whatever system. You have to do it while mixing; there’s no way to fix mixing issues afterwards with an external program. That’s “proper mixing”, and it’s the only method I can think of for getting a consistent volume on any system. Anyway, you’ll find out either way.
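The low cut mentioned above is usually a high-pass filter on each track. As a rough illustration only, here is a minimal Python sketch of a second-order high-pass built from the well-known RBJ Audio EQ Cookbook formulas; the function names, the 100 Hz cutoff and the 44.1 kHz sample rate are assumptions picked for the example, not anything Renoise exposes.

```python
import cmath
import math


def highpass_coeffs(cutoff_hz, fs=44100.0, q=1 / math.sqrt(2)):
    """Second-order high-pass (RBJ cookbook), normalized so a0 == 1.

    Returns (b0, b1, b2, a1, a2). q = 1/sqrt(2) gives a Butterworth response.
    """
    w0 = 2 * math.pi * cutoff_hz / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b0 = (1 + cos_w0) / 2 / a0
    b1 = -(1 + cos_w0) / a0
    b2 = (1 + cos_w0) / 2 / a0
    a1 = -2 * cos_w0 / a0
    a2 = (1 - alpha) / a0
    return b0, b1, b2, a1, a2


def response_db(coeffs, freq_hz, fs=44100.0):
    """Magnitude response of the biquad at freq_hz, in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / fs)  # z^-1 on the unit circle
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


def process(samples, coeffs):
    """Filter a list of float samples (direct form I)."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


hp = highpass_coeffs(100.0)
for f in (25, 50, 100, 200, 1000):
    print(f"{f:>5} Hz: {response_db(hp, f):6.1f} dB")
```

At the cutoff the level is down about 3 dB, and below it the response falls by roughly 12 dB per octave; which cutoff suits a given track is a mixing decision, not something the code can tell you.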

If you would humor me, did you do all your Bandcamp work in Renoise? Can you discuss your mastering workflow briefly?

I’m not looking to fix mixing issues yet. I’m trying to diagnose the problem first with software.

I think the problem is I’m not driving the EQ high enough in the master track.

I did, and sure, but not rn cause I gotta bounce for work

@pictureposted

I’ve got the impression you don’t understand what was written here.

You don’t even need an EQ in your master track, especially not to gain loudness…

All right, good luck then.

I don’t use it, but maybe have a look at Audiolens from iZotope. (Oh, it’s not for Linux, sry.)

“Meet the new Audiolens desktop app which makes track referencing and comparison easy by analyzing audio from any streaming platform or audio source. Build your personal library of reference tracks that you can access anytime to compare against your own mixes or masters.”

Upload some audio or an xrns and the diagnoses will flow, I guarantee it!

At first I thought Audiolens was kind of stupid. But after playing with it for a bit, and loading the measurements into Ozone 10, I think it could potentially be a useful tool indeed. Thanks for mentioning it.

Hello. If you don’t want to use automatic/AI solutions, there is no way around properly learning to mix or master yourself, or finding somebody who does it for you.

So… even on zero budget, you can still do it. It seems you already have Renoise, and you can mix and master with it. If you want to go fancy, you can render from Renoise and mix and/or master in other free software like Ardour, but mixing and mastering in Renoise works too. And of course you need something decent to listen to your work on, suitable for the job: either good speakers in a treated room, or proper headphones. I use headphones, and the benefit is that you can work anywhere with them. But it is harder to learn how a headphone mix translates to speakers, so it will take more time to get used to. You need headphones or speakers that reproduce the sound in a linear (flat) fashion with all the details, with good resolution and depth.

You only need a few other tools. Maybe I can describe how I do it… I track the instruments, and the mix is about shaping each track so it has the proper depth and timbre and works well together with the other parts. This is the tricky part, and it takes a lot of expertise and experience. Basically I look for the main elements and the frequency ranges that define them, and lower those ranges in the other tracks so the main elements stand out and no longer interfere with each other. Some instruments will always sit in the background or melt with others, while you can make sure that others, like vocals, keep their most defining frequency ranges unspoiled. You can let rhythmic elements partly overlap the frequencies of vocals or melodic parts, but melodic sounds eat each other if they clash.

I try to have an ear for each sound and listen for parts that stick out, are too much, ring in a bad way or are mushy… then I cut them with an EQ ever so slightly, just enough that they become neutral and don’t overshadow the other instruments. Then comes a second EQ step that sets the tonal balance of the sound, e.g. cutting the bass, smoothing the highs, emphasizing certain mid or high frequency regions, or adding small boosts in different places so the bass instruments get their own place in the mix to push through. It is good to listen to each part on its own to check that it still sounds natural, and then together with the others. It is easy to ruin a sound by cutting too much, or to make it sound too aggressive with EQ boosts that are just slightly too high. Then you can add reverbs/delays to create more room in the mix.

A third important step is dynamics, that is, shaping the attack curves and pumping of the sounds with compressors. You have to work all of this out in dedicated steps (or in a loop while you are still practicing) until everything works perfectly together. In the beginning this can take a long time, but with practice and experience you will develop recipes that work for your style.
Don’t forget to also watch a lot of tutorials and other people’s recipes on how to do it for different styles.
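For the “cut it ever so slightly” step, a peaking (bell) EQ is the usual tool. Below is a minimal Python sketch of one, based on the RBJ Audio EQ Cookbook peaking-filter formulas; the -2 dB cut at 400 Hz with Q = 1.4 is an arbitrary example setting, and the function names are hypothetical.

```python
import cmath
import math


def peaking_coeffs(center_hz, gain_db, q=1.0, fs=44100.0):
    """RBJ cookbook peaking EQ, normalized so a0 == 1. Returns (b0, b1, b2, a1, a2)."""
    a = 10 ** (gain_db / 40)  # square root of the linear gain at the center
    w0 = 2 * math.pi * center_hz / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha / a
    return (
        (1 + alpha * a) / a0,
        -2 * cos_w0 / a0,
        (1 - alpha * a) / a0,
        -2 * cos_w0 / a0,
        (1 - alpha / a) / a0,
    )


def response_db(coeffs, freq_hz, fs=44100.0):
    """Magnitude response of the biquad at freq_hz, in dB."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / fs)  # z^-1 on the unit circle
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1 + a1 * z1 + a2 * z1 * z1)
    return 20 * math.log10(abs(h))


# A gentle corrective cut: -2 dB around 400 Hz (example values only).
cut = peaking_coeffs(400.0, -2.0, q=1.4)
for f in (100, 200, 400, 800, 1600):
    print(f"{f:>5} Hz: {response_db(cut, f):5.2f} dB")
```

The cut reaches its full depth only at the center frequency and fades back to 0 dB away from it; a higher Q narrows the affected region, which is one way to keep a corrective cut from thinning out the rest of the sound.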

In the end, ideally, the whole mix has a frequency curve similar to pink noise wherever there is busy action in the foreground. Of course it can also have bumps, or notches cut out of the ideal line, where the material calls for that emphasis. The busiest parts of the mix should make a smooth line in the spectrum. You can use the spectrum analyzer in Renoise for this. I recommend setting the block size of the frequency graph to a high value; then you can set the slope of the graph to +4.8 dB/oct to get a response like pink noise. If you hit the right spectrum, you will see a flat horizontal line in the frequency graph (with small jaggies, of course, but no wavering). You can also set the peak fall to “still”, play back some sound, and see the peak envelope of a passage of music.
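To see what a slope setting does, here is a small Python worked example of the arithmetic, under the assumption that an analyzer's slope simply adds a fixed number of dB per octave around a reference frequency (the 1 kHz reference and the function name are hypothetical; how Renoise applies its slope internally is not modeled). Ideal pink noise falls about 3.01 dB per octave on an analyzer with linearly spaced FFT bins, so a tilt of that size flattens it exactly; with a +4.8 dB/oct tilt, ideal pink noise would rise by about 1.8 dB per octave, so a mix that looks flat at that setting is somewhat darker than pure pink noise.

```python
import math

# Pure pink noise has a power spectral density proportional to 1/f,
# so on an analyzer with linearly spaced FFT bins it falls by
# 10*log10(2) ≈ 3.01 dB every time the frequency doubles.
PINK_FALL_DB_PER_OCT = 10 * math.log10(2)


def displayed_pink_db(freq_hz, tilt_db_per_oct, ref_hz=1000.0):
    """Level of ideal pink noise (0 dB at ref_hz) after a display tilt.

    Assumption: the slope setting adds tilt_db_per_oct per octave
    relative to ref_hz.
    """
    octaves = math.log2(freq_hz / ref_hz)
    pink_db = -PINK_FALL_DB_PER_OCT * octaves  # 1/f power spectral density
    return pink_db + tilt_db_per_oct * octaves


freqs = (125, 250, 500, 1000, 2000, 4000, 8000)
for tilt in (PINK_FALL_DB_PER_OCT, 4.8):
    levels = [displayed_pink_db(f, tilt) for f in freqs]
    print(f"tilt {tilt:.2f} dB/oct:", " ".join(f"{x:+.1f}" for x in levels))
```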

For a pink noise reference to listen to, you can simply add a track with a looped noise sample with Autoseek enabled to your mix, then enable it and level it in with the mixer. It can help beginners to mix the individual sounds against the noise so that each one just barely stands out clearly above it. Then the noise is removed and the parts are played together, and each track should end up at a good level relative to the others.
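If you don’t have a pink noise sample handy, one can be generated. This is a minimal Python sketch using only the standard library and the Voss-McCartney algorithm (a common approximation of pink noise, not an exact 1/f generator); the function names, the 16 rows, the -6 dBFS peak and the file name in the comment are all example assumptions.

```python
import random
import struct
import wave


def pink_noise(num_samples, num_rows=16, seed=None):
    """Approximate pink noise with the Voss-McCartney algorithm.

    Row k is refreshed roughly every 2**(k+1) samples (chosen by the
    trailing zeros of the sample counter); summing the rows plus fresh
    white noise gives a spectrum that falls roughly 3 dB per octave.
    """
    rng = random.Random(seed)
    rows = [rng.uniform(-1, 1) for _ in range(num_rows)]
    running_sum = sum(rows)
    out = []
    for i in range(1, num_samples + 1):
        # (i & -i) isolates the lowest set bit; its position = trailing zeros.
        k = min((i & -i).bit_length() - 1, num_rows - 1)
        new_value = rng.uniform(-1, 1)
        running_sum += new_value - rows[k]
        rows[k] = new_value
        # Divide by the number of summed sources so |output| <= 1.
        out.append((running_sum + rng.uniform(-1, 1)) / (num_rows + 1))
    return out


def to_pcm16(samples, peak_dbfs=-6.0):
    """Normalize to peak_dbfs and pack as little-endian 16-bit PCM bytes."""
    peak = max(abs(s) for s in samples) or 1.0
    scale = 10 ** (peak_dbfs / 20) / peak
    return b"".join(struct.pack("<h", int(round(s * scale * 32767))) for s in samples)


def write_wav(path, samples, fs=44100, peak_dbfs=-6.0):
    """Write a mono 16-bit WAV file that can be loaded and looped as a sample."""
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(fs)
        wf.writeframes(to_pcm16(samples, peak_dbfs))


# Example (hypothetical file name): 10 seconds is plenty for a loop.
# write_wav("pink_noise_loop.wav", pink_noise(10 * 44100, seed=1))
```

Since the noise is random, the loop point will not click as long as the sample is looped as a whole; the level you set it to in the mixer matters more than its peak in the file.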

For mastering, you could take a render and again use an AI solution, or do it yourself in Renoise. I do it without plugins; the sound of the Renoise effects is not the most pristine, but you can also use plugins, and there are free open-source ones for Linux. You need EQ, a compressor and a good limiter, saturation and bass enhancement or exciters, maybe multiband compression (you can use the Multiband Send in Renoise to get multiband compression), and some subtle room from a VST or convolver. Like I said, you can also do it with the Renoise effects alone, which can be okay for noisy projects; just don’t expect top-notch sound from them. I also use a metering plugin to hit the right loudness for the result; I just use the free DISTRHO LUFS-Meter plugin, and it works. Loudness metering is the only functionality Renoise really lacks that you need for a good result. Sometimes, especially for coarse-sounding styles, it can be okay to skip the multiband magic and just apply a final shaping EQ, compress, and limit the sound to the desired loudness; you are ready to go if the mix was already good enough for that to work.
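The last stage of that chain, limiting to a target loudness, can be sketched in a few lines. This is a minimal Python illustration with hypothetical function names and example defaults (-1 dB ceiling, 50 ms release): a brickwall peak limiter with instant attack and no lookahead, plus the plain arithmetic for how much gain moves a loudness reading to a target. It does not measure LUFS itself; that still needs a proper meter such as the one mentioned above.

```python
import math


def peak_limit(samples, ceiling_db=-1.0, release_ms=50.0, fs=44100.0):
    """Very simple brickwall peak limiter (no lookahead).

    Attack is instantaneous, so no output sample exceeds the ceiling; the
    gain then recovers exponentially with the given release time. Real
    mastering limiters add lookahead and smoother gain curves to avoid
    the distortion this instant attack causes on transients.
    """
    ceiling = 10 ** (ceiling_db / 20)
    release_coeff = math.exp(-1.0 / (release_ms * 0.001 * fs))
    gain = 1.0
    out = []
    for x in samples:
        needed = min(1.0, ceiling / abs(x)) if x != 0 else 1.0
        if needed < gain:
            gain = needed  # clamp down immediately
        else:
            gain = needed + (gain - needed) * release_coeff  # recover slowly
        out.append(x * gain)
    return out


def gain_to_target_db(measured_lufs, target_lufs):
    """Gain (dB) needed to move an integrated loudness reading to a target."""
    return target_lufs - measured_lufs
```

For example, if the meter reads -18 LUFS integrated and you aim for -14 LUFS, `gain_to_target_db(-18.0, -14.0)` gives +4 dB; you would push that much into the limiter and then re-measure, since limiting itself changes the loudness slightly.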

So this is a lot to learn. It can be done, just not within a few days or weeks. You need to train your ears on the gear you have: to listen for levels, for neutral versus harsh or dull sounds, and for the attacks, bodies and tails of the sounds and how well they blend together. It’s a big art, and how deep you want to go depends on how delicate or coarse the material you work with is. My tip, if you try it yourself: don’t overdo it and push the sounds too far into each other. Instead, try to leave the sounds natural and only cut slightly what overshadows other parts or makes the sound muddy. Once you have learned to produce a completely neutral sound on your production gear, it can translate to every system, and the shape you give it on ideal gear can carry over to (hopefully) many other systems.

While learning, you need ways to listen to the mix on different gear, so you know what to expect and what the limitations of each are, especially in the bass. I like to have different filters on the master that I can toggle on and off to compare how the mix sounds with reduced detail: one that cuts the bass so only content above roughly 330 Hz remains, a shallow band-pass around 1 kHz that focuses on the important parts of the mix, a mono fold-down, etc. Sometimes it can work well to reshape sounds drastically, though; in electronic music, for example, most people reserve the bass frequencies for only a few bass-heavy instruments and cut the bass from all other sounds.
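The mono check in that list is easy to reason about numerically. Here is a minimal Python sketch (hypothetical helper names, plain lists of float samples) that folds a stereo pair to mono as (L + R) / 2 and reports how much level is lost compared to the stereo original.

```python
import math


def rms_db(samples):
    """RMS level in dB relative to full scale (a full-scale square wave reads 0 dB)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


def mono_fold(left, right):
    """Sum a stereo pair to mono the way a single speaker or mono bus would."""
    return [(l + r) / 2 for l, r in zip(left, right)]


def mono_loss_db(left, right):
    """How much quieter the mono fold-down is than the stereo signal.

    Near 0 dB means the content survives mono playback; about 3 dB is typical
    for uncorrelated (very wide) material; very large values mean out-of-phase
    content is cancelling.
    """
    stereo_db = rms_db(left + right)  # both channels pooled together
    return stereo_db - rms_db(mono_fold(left, right))


sine = [math.sin(2 * math.pi * 440 * n / 44100) for n in range(4410)]
print(f"identical channels: {mono_loss_db(sine, sine):.2f} dB lost")
print(f"inverted right channel: {mono_loss_db(sine, [-x for x in sine])} dB lost")
```

A part that loses a lot of level in mono, such as a bass with stereo widening, is likely to thin out on phone or single-speaker playback.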

I got it for free, but it only works combined with Ozone or Neutron, right? So it’s pretty useless for me…

@pictureposted

That’s why you need to compress and limit to achieve your goal of a consistent volume:
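To make the compress-and-limit point concrete, here is a minimal Python sketch of a basic feed-forward compressor: a hard-knee gain curve plus a simple attack/release envelope follower. The threshold, ratio and timing defaults are arbitrary example values, the function names are hypothetical, and this is a generic textbook design, not how Renoise’s own Compressor device is implemented.

```python
import math


def static_gain_db(level_db, threshold_db=-20.0, ratio=4.0):
    """Hard-knee downward compressor: gain change (dB) for a given input level."""
    if level_db <= threshold_db:
        return 0.0
    compressed = threshold_db + (level_db - threshold_db) / ratio
    return compressed - level_db


def compress(samples, threshold_db=-20.0, ratio=4.0,
             attack_ms=10.0, release_ms=100.0, fs=44100.0):
    """Feed-forward compressor with a peak envelope follower (sketch only)."""
    att = math.exp(-1.0 / (attack_ms * 0.001 * fs))
    rel = math.exp(-1.0 / (release_ms * 0.001 * fs))
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)
        coeff = att if level > env else rel  # rise with attack, fall with release
        env = coeff * env + (1 - coeff) * level  # smoothed peak level
        level_db = 20 * math.log10(env) if env > 1e-9 else -180.0
        gain = 10 ** (static_gain_db(level_db, threshold_db, ratio) / 20)
        out.append(x * gain)
    return out
```

With a -20 dB threshold and a 4:1 ratio, a -10 dB input comes out at -17.5 dB (7.5 dB of gain reduction), while anything below -20 dB passes untouched, so loud passages are pulled toward quiet ones; a limiter after it then catches whatever peaks remain.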

Thanks for this long post. Very helpful. I have been learning/reading about mixing/mastering since I bought Renoise in 2022.

I am attempting simple experiments with a single track and testing on a variety of platforms. I suppose the weakness in my setup is that I only use headphones, not speakers, and the speakers in my car or phone deliver different results than headphones on the production computer, laptop, or phone.

Meaning the discrepancy is between headphones and speakers, and in sound-level output. The flaws in the sound seem more apparent on speakers than on headphones.