xStream v1.55

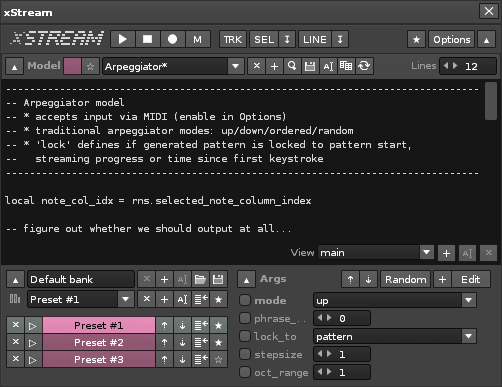

xStream is back!

Since the last version, which I released almost a year ago, I’ve used this tool, but not as much as I wanted to.

Too often, the party got spoiled by a number of annoying, recurring issues - all of which should now be gone with this release (well, hopefully).

For this, I’d like to thank pat for reporting everything he came across in just a couple of days of xStreaming.

Also, some much-needed internal reorganization had to happen. Joule and others have made it clear that multi-track operation is the future for this tool.

And so, given how much extra work this would involve, xStream development was put on the back burner for a while.

But now, the tool is back, and I dare say, better than ever. It’s pretty much the same featureset as the previous release, but with a more solid foundation.

Download it from the tool page:

http://www.renoise.com/tools/xstream

As the featureset has stabilized since that “sprint” of last year, I’ve also had time to update the documentation.

It’s now spread across topics, with a better introduction to xStream coding than before, and generally just better organized.

You can check out the new documentation here (on GitHub). Some of the pictures are a little outdated; I’m working on fixing that.

https://github.com/renoise/xrnx/tree/master/Tools/com.renoise.xStream.xrnx

Changelog for this release:

- Core: refactored several internal classes

- Core: more solid, simpler streaming implementation

- Fixed: loading favorites.xml was broken

- Fixed: selecting [no argument] would throw an error

- Fixed: table constants (e.g. EMPTY_XLINE) are now returned as a copy

- Fixed: read-only value arguments are no longer MIDI assignable

- Fixed: error when trying to create an argument with just one "item"

- Fixed: failure to export presets when arguments are tabbed

- Fixed: setting custom userdata folder is now applied immediately

- Added: ability to migrate userdata to a custom folder

- Added: xLFO class + demonstration model

- Added: RandomScale model

- Added: Updated documentation