Hi!

I’ve noticed that parameter automation in Renoise is not nearly as smooth as in other DAWs (I tested Reaper, Bitwig and FL Studio).

The scenario is as follows:

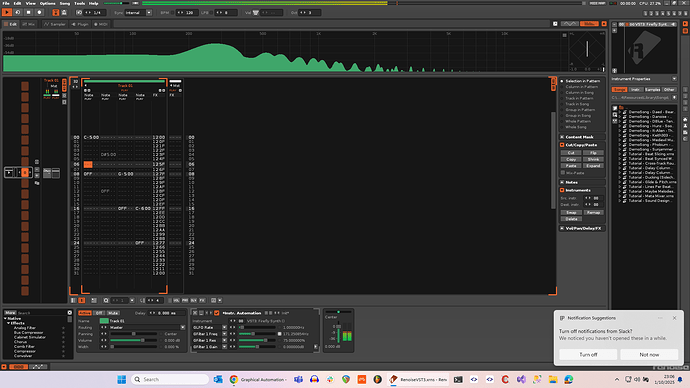

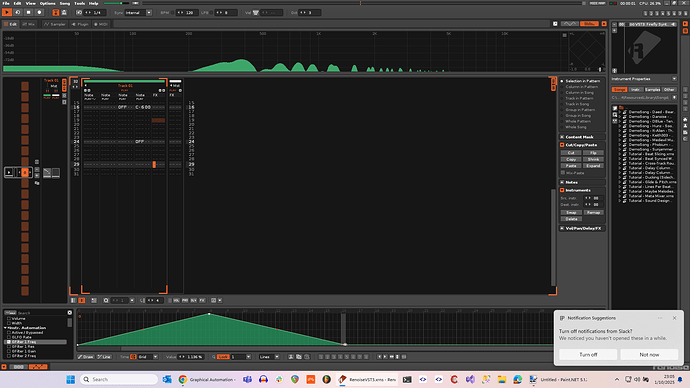

Load Surge XT into Renoise, create a single pattern of length 32 at BPM 120 and LPB 8, and play a single C4 note from the start (Surge defaults to a saw oscillator). Add an instrument automation control that sweeps Surge’s filter cutoff from 0 to max over those 32 lines, i.e. +8 per line. The resulting changes in filter cutoff are audibly stepped. I am running at 48 kHz with the default 12 ticks per line, but varying either of those two doesn't seem to help much.
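To put numbers on that setup, here is a small back-of-the-envelope calculation in Python (the variable names are my own; the values are the ones above). At BPM 120 and LPB 8 each line lasts 62.5 ms (3000 samples at 48 kHz), each of the 12 ticks is 250 samples, and the whole 32-line sweep takes 2 seconds.

```python
# Timing of the test pattern described above (values taken from the post).
SAMPLE_RATE = 48_000      # Hz
BPM = 120
LPB = 8                   # lines per beat
TICKS_PER_LINE = 12
PATTERN_LINES = 32

lines_per_second = BPM * LPB / 60                      # lines played per second
line_ms = 1000 / lines_per_second                      # duration of one line in ms
pattern_seconds = PATTERN_LINES / lines_per_second     # length of the whole sweep
samples_per_line = SAMPLE_RATE / lines_per_second      # audio samples per line
samples_per_tick = samples_per_line / TICKS_PER_LINE   # audio samples per tick

print(f"{lines_per_second=} {line_ms=} {pattern_seconds=}")
print(f"{samples_per_line=} {samples_per_tick=}")
```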

Is this expected behaviour? And if so, why? Reaper and Bitwig, on a similarly simple demo, render the filter cutoff modulation perfectly smoothly. Sorry if I’m missing something.

So, really: how often are automated VST3 parameter updates supposed to be sent to the plugin's processor?
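As far as I understand the VST3 model, automation reaches the processor as per-parameter queues of (sample offset, normalized value) points inside each process call (read via `IParamValueQueue::getPoint`), so a host may deliver anything from zero to many points per block. Below is a minimal Python sketch, with a hypothetical helper name and not the SDK's API, of one way a plugin could turn such sparse points into per-sample values by linear interpolation.

```python
from typing import List, Tuple


def render_param_block(prev_value: float,
                       points: List[Tuple[int, float]],
                       block_size: int) -> List[float]:
    """Expand sparse automation points into one value per sample.

    Hypothetical helper, loosely modelled on a VST3 parameter queue:
    `points` are (sample_offset, normalized_value) pairs sorted by offset,
    each offset < block_size. The value ramps linearly from the previous
    point (or from `prev_value` at offset 0) to each new point, then holds.
    """
    out: List[float] = []
    start_pos, start_val = 0, prev_value
    for offset, value in points:
        span = offset - start_pos
        # Linear ramp that reaches `value` exactly at `offset`.
        for i in range(span):
            out.append(start_val + (value - start_val) * (i / span))
        start_pos, start_val = offset, value
    # Hold the last known value for the rest of the block.
    out.extend([start_val] * (block_size - start_pos))
    return out


print(render_param_block(0.0, [(4, 1.0)], 8))
```

With a single point at offset 4, the 8-sample block ramps 0.0, 0.25, 0.5, 0.75 and then holds 1.0; with no points at all, the previous value is simply held, which is what produces steps when points arrive rarely.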

For some in-depth details: I’m developing my own VST3 plugin (I just used Surge as an example because anyone can download it), and these are the results I get when debugging automation events in my own synth:

- Reaper, 160-sample blocks at 48 kHz: 1 update per block, so 300 per second

- Renoise, 480-sample blocks at 48 kHz: 1 update every 8 (!) blocks, so only 12.5 per second

- Renoise, 128-sample blocks at 48 kHz: 1 update every 20 (!) blocks, so only 18.75 per second
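The per-second figures in that list follow from sample rate ÷ block size ÷ blocks per update. A quick Python check (the function name is just for illustration) also gives the gap between updates in milliseconds:

```python
def updates_per_second(sample_rate: int, block_size: int, blocks_per_update: int) -> float:
    """Automation updates per second when one update arrives every N blocks."""
    blocks_per_second = sample_rate / block_size
    return blocks_per_second / blocks_per_update


# The three measurements from the list above: (sample rate, block size, blocks per update).
measurements = {
    "Reaper, 160-sample blocks": (48_000, 160, 1),
    "Renoise, 480-sample blocks": (48_000, 480, 8),
    "Renoise, 128-sample blocks": (48_000, 128, 20),
}

for name, args in measurements.items():
    rate = updates_per_second(*args)
    print(f"{name}: {rate:g} updates/s, one every {1000 / rate:g} ms")
```

That works out to roughly 3.3 ms between updates in Reaper versus 80 ms and about 53 ms in the two Renoise cases.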

TBH I haven’t debugged Bitwig or Fruity, but they do sound smooth, so I assume they send lots of parameter updates too.

Now, I know all of this can in theory be solved with internal parameter smoothing in the plugin (I actually tried that, and it sounds smooth with a low-pass of 150 ms or so), but that adds roughly 150 ms of extra lag to the parameter response. Also, surely it can’t be the case that a fairly mainstream synth like Surge behaves so radically differently in Renoise than in every other DAW, with just default settings?
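The kind of in-plugin smoothing mentioned above can be sketched as a one-pole low-pass applied to the parameter value per sample (Python, hypothetical function names; a 150 ms time constant at 48 kHz is assumed to match the figure above). It also makes the cost visible: after a step, the smoothed value only reaches about 63% of the target after one time constant (7200 samples here).

```python
import math


def one_pole_coeff(time_constant_s: float, sample_rate: float) -> float:
    """Per-sample feedback coefficient of a one-pole low-pass with time constant tau."""
    return math.exp(-1.0 / (time_constant_s * sample_rate))


def smooth(values, time_constant_s: float, sample_rate: float, start: float = 0.0):
    """Run a (possibly stepped) per-sample control signal through a one-pole smoother."""
    a = one_pole_coeff(time_constant_s, sample_rate)
    y = start
    out = []
    for x in values:
        # y[n] = a * y[n-1] + (1 - a) * x[n]
        y = a * y + (1.0 - a) * x
        out.append(y)
    return out


# A step from 0 to 1, smoothed with a 150 ms time constant at 48 kHz.
step = [1.0] * 7200                      # exactly one time constant of samples
smoothed = smooth(step, 0.150, 48_000)
print(f"after 150 ms: {smoothed[-1]:.3f}")
```

For a step input starting from 0, the output after n samples is 1 − aⁿ, so any time constant long enough to hide 50 to 80 ms gaps between updates inevitably slows down every deliberate parameter change as well.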

Any insights and/or clarification greatly appreciated. Or, even better, please just tell me that I’m wrong and I messed up somewhere :)

Still loving Renoise, so thanks once more for a wonderful product.