I am going to expand here because I am passionate about this topic…

From a native Renoise instrument you can generate sound in several ways:

- With the native sampler, using an audio wave (what you see in the waveform view). For this to work, a sample slot must contain a buffer with data, which has its own number of channels, sample rate and bit depth. This sound can go through modulation, instrument fx and track DSP. A single note can trigger several audio waves (layers in the keyzones).

- With a single instrument VST plugin (no modulation or instrument fx).

- With MIDI, sending notes through MIDI OUT to an external device (no modulation, no instrument fx, no track DSP; the notes play on the device, but its sound is not sent to any track).

- Or any combination of the 3 previous options.

Basically, each of these options provides the base sound that notes then trigger.

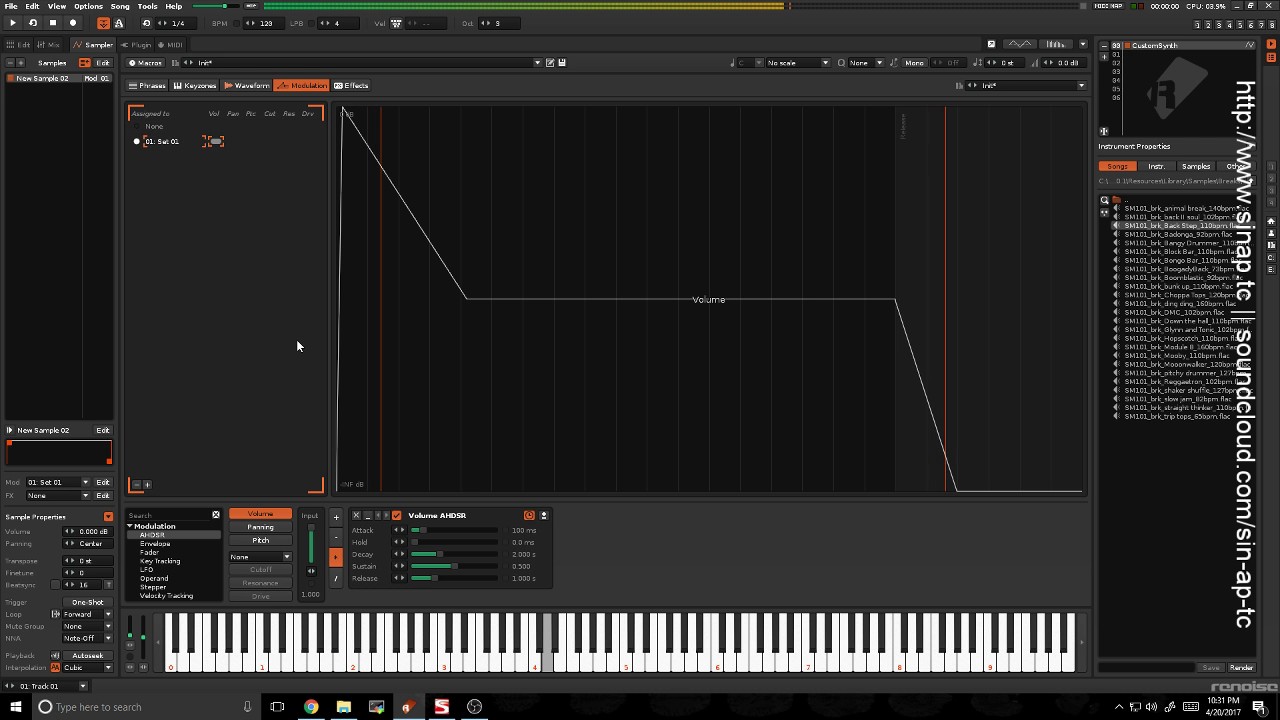

Starting from a native audio wave (a sample in the instrument's sample list, shown in the waveform view), you can then apply modulation and route it through the instrument fx. The result plays on whichever tracks contain notes for that instrument, passing through each track's DSP effects. The track output (including track sends) finally goes to the master track.

A simple diagram of the path of an audio wave (the base sound, i.e. the waveform) in a native instrument, in 6 positions:

1- base sound → 2- instrument modulation → 3- instrument fx → 4- track DSP → 5- master track DSP → 6- song render.
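
The six positions can be read as a chain of functions applied in order. The sketch below is a simplified Python model, not Renoise code: every stage name is hypothetical, and each stage is a trivial gain, envelope or clipper chosen only so that the ordering is easy to follow.

```python
from typing import Callable, List

Block = List[float]              # one channel of sample values in [-1.0, 1.0]
Stage = Callable[[Block], Block]

def modulation(block: Block) -> Block:
    # 2- instrument modulation: here, a linear fade-in (volume envelope)
    n = len(block)
    return [s * (i / max(n - 1, 1)) for i, s in enumerate(block)]

def instrument_fx(block: Block) -> Block:
    # 3- instrument fx: here, a hard clipper at +/-0.5
    return [max(-0.5, min(0.5, s)) for s in block]

def track_dsp(block: Block) -> Block:
    # 4- track DSP: here, a fixed gain of 0.8
    return [s * 0.8 for s in block]

def master_dsp(block: Block) -> Block:
    # 5- master track DSP: here, a final limiter at +/-1.0
    return [max(-1.0, min(1.0, s)) for s in block]

CHAIN: List[Stage] = [modulation, instrument_fx, track_dsp, master_dsp]

def render(base_sound: Block) -> Block:
    # 1- the base sound enters the chain; 6- the final output is what gets rendered
    out = base_sound
    for stage in CHAIN:
        out = stage(out)
    return out
```

For example, `render([1.0, 1.0, 1.0])` fades in, clips to 0.5 and scales by 0.8, giving roughly `[0.0, 0.4, 0.4]`. Swapping the order of the stages would give a different result, which is why the position of each step matters.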

So, starting from a native sample (position 1) and its data buffer, you can manipulate the data in that buffer. As long as you do not change the number of frames, you can change the amplitude of all its samples “practically in real time”, provided the number of frames is not very large.

The buffer is made up of consecutive frames, which act as “digital data containers”. Each frame stores one sample value (an amplitude) per channel, and the frame's index is its position in time. It is this sample value, specifically its amplitude, which ranges from -1.0 to 1.0, that can be manipulated practically in real time, as long as the audio wave does not contain a very high number of frames. Because the number of frames does not change, the buffer does not have to be destroyed and rebuilt, which could take too long. If you iterate over all the frames with some specific function, you change the base sound of the audio wave, since you are manipulating the amplitude of its samples (the data within each frame).
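
A minimal Python model of that idea, not the Renoise API: the buffer is a list of frames, each frame holds one value per channel in the range -1.0 to 1.0, and a function is applied to every value while the frame count stays untouched. In an actual Renoise tool the same loop would presumably be written in Lua against the sample's `sample_buffer`, reading with `sample_data(channel, frame)` and writing with `set_sample_data(channel, frame, value)` between `prepare_sample_data_changes()` and `finalize_sample_data_changes()`; treat that mapping as an assumption to check against the Renoise Lua API reference.

```python
from typing import Callable, List

Frame = List[float]    # one sample value per channel, each in [-1.0, 1.0]
Buffer = List[Frame]   # frames in time order: index 0 is the first frame

def transform_in_place(buffer: Buffer, fn: Callable[[float, int], float]) -> None:
    """Apply fn(value, frame_index) to every sample value, keeping the frame count."""
    for frame_index, frame in enumerate(buffer):
        for channel in range(len(frame)):
            value = fn(frame[channel], frame_index)
            # clamp so the result is still a valid sample value
            frame[channel] = max(-1.0, min(1.0, value))

# Example: a stereo buffer of 4 frames, halved in amplitude
buf: Buffer = [[0.2, -0.2], [0.8, -0.8], [1.0, -1.0], [0.0, 0.0]]
transform_in_place(buf, lambda v, i: v * 0.5)
```

Because `transform_in_place` only rewrites values inside existing frames, the buffer keeps its length (4 frames here), which is exactly the condition that lets the change happen without rebuilding the buffer.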

So you can change the sound by manipulating the data in the buffer without destroying it, specifically the amplitude of each sample, using iteration functions in a Lua tool. You can run such a function from a button, or create a tool that detects when each note starts and stops and, during that time, transforms the amplitude of all the samples of the audio waveform in some way.
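
The note-driven variant can be sketched as a small state machine. This is a hypothetical Python model: the class and its `note_on`, `note_off` and `on_idle` methods are invented for illustration, and how a real Lua tool would detect note start and stop (and which Renoise timer or idle notifier would drive `on_idle`) is left out as an assumption. The design choice worth noting is that every tick recomputes the buffer from an untouched copy of the original, so repeated passes do not accumulate rounding or clipping, and note off restores the original wave.

```python
import math
from typing import List

Frame = List[float]
Buffer = List[Frame]

class NoteDrivenShaper:
    """Hypothetical tool: reshapes a buffer only while a note is held.

    The frame count never changes; only sample values are rewritten.
    """

    def __init__(self, buffer: Buffer) -> None:
        self.buffer = buffer
        # untouched copy, so every tick starts from the original wave
        self.original = [frame[:] for frame in buffer]
        self.active = False
        self.tick = 0

    def note_on(self) -> None:
        self.active = True
        self.tick = 0

    def note_off(self) -> None:
        self.active = False
        self._restore()

    def on_idle(self) -> None:
        # called periodically while the tool runs; does nothing without a held note
        if not self.active:
            return
        gain = 0.5 + 0.5 * math.cos(self.tick * 0.5)  # sweeps between 0.0 and 1.0
        for i, frame in enumerate(self.original):
            for ch, value in enumerate(frame):
                self.buffer[i][ch] = value * gain
        self.tick += 1

    def _restore(self) -> None:
        # copy the original values back into the same frames
        for i, frame in enumerate(self.original):
            self.buffer[i][:] = frame
```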

Aside from manipulating the base sound of the audio wave, you can apply modulation and instrument fx.

In summary, in Renoise there are only two things:

- the audio waveform, which is the basis of the sound and which you can manipulate as a sequence of frames, equivalent to granular digital synthesis,

- and the modulation and effects added on top of that base sound.

If you rebuild the buffer, you can work with subtractive digital synthesis, starting from audio waves that are mathematically “easy” to create, such as sine, sawtooth or square waves. You can even use additive digital synthesis techniques, which involve generating different audio waves and mixing them together.
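
Those “easy” waves are one-line formulas of the phase (0.0 to 1.0 over one cycle). The Python sketch below builds a fresh single-cycle mono buffer of any length, which is the part that requires rebuilding the buffer, since the frame count is chosen anew. The function names are hypothetical; in Renoise the new buffer would presumably be allocated with the sample buffer's `create_sample_data(...)` and then filled, an assumption to verify in the API reference. These are naive, non-band-limited shapes, so saw and square will alias when played at high pitches.

```python
import math
from typing import Callable, Dict, List

def sine(phase: float) -> float:
    return math.sin(2.0 * math.pi * phase)

def saw(phase: float) -> float:
    # rises linearly from -1.0 towards 1.0 over one cycle
    return 2.0 * phase - 1.0

def square(phase: float) -> float:
    # +1.0 for the first half of the cycle, -1.0 for the second half
    return 1.0 if phase < 0.5 else -1.0

WAVES: Dict[str, Callable[[float], float]] = {"sine": sine, "saw": saw, "square": square}

def build_cycle(wave: str, num_frames: int) -> List[float]:
    """Build a new mono buffer holding exactly one cycle of the chosen wave."""
    fn = WAVES[wave]
    return [fn(i / num_frames) for i in range(num_frames)]
```

For instance, `build_cycle("square", 4)` returns `[1.0, 1.0, -1.0, -1.0]` and `build_cycle("saw", 4)` returns `[-1.0, -0.5, 0.0, 0.5]`.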

As an example of a Lua tool for digital sound synthesis, there is the MIDI Universal Controller (MUC) tool. Its “Sampler Waveform” section gives access to a sub-tool called “Wave Builder”, which works on an audio wave buffer with granular synthesis (basically manipulating the amplitude of the samples in each frame), subtractive synthesis (with up to 8 wavetables) and additive synthesis (mixing these wavetables).
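
Mixing wavetables can be sketched as a weighted sum followed by normalization. This is a hypothetical Python helper, not MUC's actual code: it only borrows the limit of 8 wavetables mentioned above and assumes all tables have the same number of frames.

```python
from typing import List

MAX_TABLES = 8  # the limit mentioned for Wave Builder

def mix_wavetables(tables: List[List[float]], weights: List[float]) -> List[float]:
    """Weighted sum of equally long wavetables, normalized to a peak of 1.0."""
    if not 1 <= len(tables) <= MAX_TABLES:
        raise ValueError("expected between 1 and 8 wavetables")
    if len(weights) != len(tables):
        raise ValueError("one weight per wavetable")
    length = len(tables[0])
    if any(len(t) != length for t in tables):
        raise ValueError("all wavetables must have the same number of frames")
    mixed = [sum(w * t[i] for t, w in zip(tables, weights)) for i in range(length)]
    peak = max(abs(v) for v in mixed) or 1.0  # avoid dividing by zero on silence
    return [v / peak for v in mixed]
```

Normalizing by the peak keeps the mix inside -1.0 to 1.0 however many tables are summed, at the cost of changing the overall level whenever the weights change.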

However, a “real-time” tool would be limited to manipulating the amplitude of all the samples, without touching the number of frames in the buffer, and only works well as long as that buffer does not contain a large number of frames: you have to iterate over the entire range to change each amplitude value, and the work doubles with 2 channels (left and right).
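
The cost is simply frames × channels values per pass. A quick Python calculation makes the difference concrete; the 256-frame single-cycle wavetable is an illustrative assumption, not a figure from any particular tool.

```python
def samples_to_touch(num_frames: int, num_channels: int) -> int:
    """Each pass rewrites one value per channel in every frame."""
    return num_frames * num_channels

def frames_for(seconds: float, sample_rate: int) -> int:
    return round(seconds * sample_rate)

# one second of stereo audio at 44.1 kHz versus a short single-cycle wavetable
long_sample = samples_to_touch(frames_for(1.0, 44100), 2)
single_cycle = samples_to_touch(256, 2)
```

One second of stereo audio at 44.1 kHz means 88,200 values per pass, while a 256-frame stereo cycle needs only 512, which is why short waves are the practical candidates for “real-time” manipulation.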

![VCV Rack Ambient Patch [ Surge XT Modules released!! ]](https://forum.renoise.com/uploads/default/original/3X/4/a/4adf7c9b2466d42ed4c58879d1894de3ae24f4d9.jpeg)