I’m completely stuck in my workflow because I can’t insert audio tracks into Renoise.

By an audio track, I mean a recording that starts and stops at any position and is not triggered like a note.

I use a monophonic (analog) synth, and I want to sequence and tweak the knobs track by track. This can only be done by overdubbing, as it is impossible to let the synth play one track while creating a second one.

(Being one single human being, I couldn’t properly steer multiple tracks at the same time anyway.)

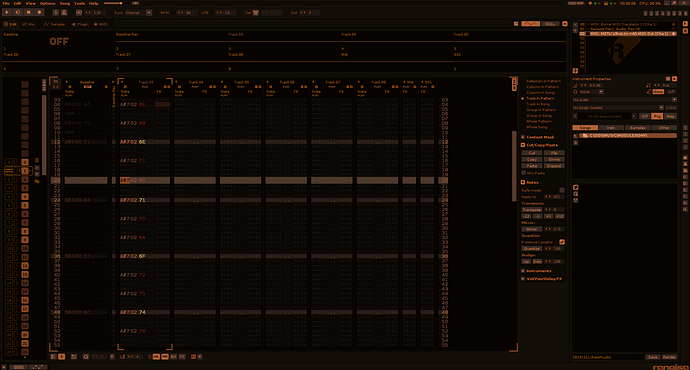

This is a basic feature of all the other DAWs… but… I am a tracker. Totally fused with hexadecimal numbers, patterns and… well, I probably don’t need to explain here why I use Renoise… I could write a book about it.

I don’t want horizontal layouts, piano rolls and all that stuff. Not for composing and sequencing.

I want to work in Renoise as it is.

But I also want to capture my live output, put it in a track in Renoise, and sequence/compose further based on that recording. That way I can tweak my knobs live (for instance on my favourite monophonic synth, which can’t save patches; that’s for control freaks, not for me).

Basically, the main difference would be that, instead of a sample that needs to be triggered, the recording plays from whatever point in the project you are working at.

To make it workable, the following is needed:

-Most important of all → it ALWAYS plays without a trigger, even when playback starts in the middle of the recording. (Technically, if only this were possible, the workflow would already work, even with the audio loaded in an instrument; everything else is just “luxury”.)

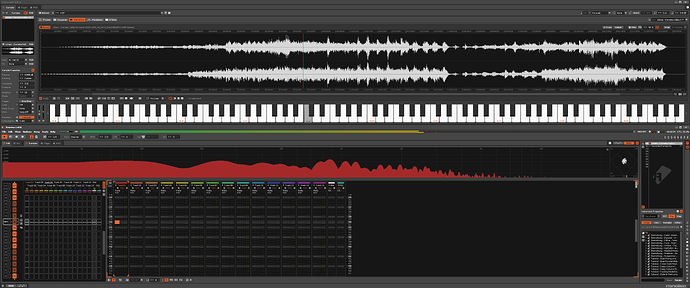

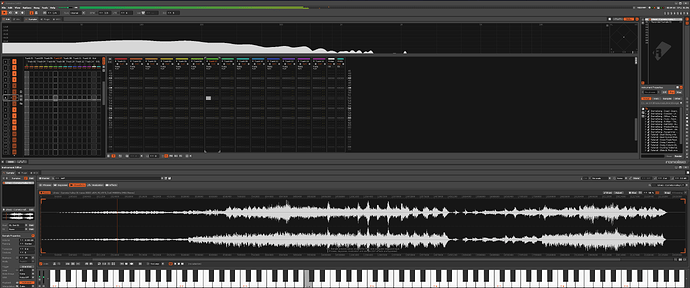

-Show the waveform (vertically of course!!) instead of notes. (A single note at the beginning isn’t very helpful, as a recording can easily last several minutes.)

-It is best implemented as an independent, synced timeline (inserting patterns, adjusting their length, etc. should have no influence on the continuously running recording).

-It would probably fit best between the Pattern Sequencer and the Pattern Editor (and be collapsible, only visible when one wishes).

-Of course it can have FX attached (I wouldn’t use this a lot personally, but that’s purely a personal choice).

It would make life much easier, and I could run Renoise completely on its own to do everything.

Of course, just a checkbox marking a certain recording/instrument as an “Audio recording”, which plays from whatever position the sequence is started at (rather than when it is triggered as a note), would already do the job and could probably be implemented in a rather short time. It would lack the visual luxury, but after all, as long as we can hear what we’re doing, it’s workable.

Now I’m really stuck…

Renoise can’t handle an audio track properly (yet it intends to be a full-featured DAW).

The other DAWs… yeah, they still resemble Cubase on the Atari. They offer nice ways to record audio, but anything related to sequencing and sculpting a track down to the detail… pfff, not my workflow.

I really hope this gets picked up, like my previous proposal about 20 years ago.

(That one was about lifting the oldskool limit on the number of patterns, as I was stuck back then too. I couldn’t finish my track because I hit 256 patterns, and that was it… Most people joked about it or suggested ways to work around it, but I was happy when the developers simply realised that limits based on 8-bit numbers were nothing more than a legacy of the past.)

In my opinion, audio tracks are the last thing Renoise is lacking. They are a basic feature of any DAW today. The combination of recordings and sequences is heaven.

(Or a nightmare if you mess things up, but hey… great possibilities always demand some learning; every Renoise adept knows that!)