A lot of interesting discussion here.

I’ve been thinking about the mono compatibility issue for a few years and reached my own conclusions:

While mono checking can be really useful for checking your overall balances and picking up any potential phase issues, I couldn’t give a damn about mono compatibility, at all.

If someone actually listens to music in mono, that’s their loss, but I’m not going to start making my music mono compatible just because mono Bluetooth speakers are a thing and some people listen to music through their phone’s speaker. If I thought that way, I would seriously limit my creative toolset for a rather irrelevant reason. Take the good old-fashioned audio demonstration of feeding a mono signal into a stereo setup and flipping the polarity of one speaker: that’s an effect you could actually use right there. It’s a creative possibility I would never, ever bump up against if I were worrying about how the mix sounds in mono, and a pretty damn unique one at that. Yeah, the sound won’t even be there in mono, just like your side signal, but in stereo it can sound so god damn cool. Depending on the characteristics of the particular sound and your room, it can even sound like it’s coming from behind you.
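
For anyone curious why that polarity trick vanishes in mono, here’s a minimal Python sketch. It assumes the common mid/side convention M = (L + R) / 2 and S = (L - R) / 2, where the mid is basically the mono fold-down; the 440 Hz test tone and 48 kHz sample rate are arbitrary placeholders.

```python
import math

def mid_side(left, right):
    """Encode L/R into M/S using M = (L + R) / 2, S = (L - R) / 2."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side

# Placeholder mono test tone: 440 Hz sine, 480 samples at 48 kHz.
sr = 48_000
mono = [math.sin(2 * math.pi * 440 * n / sr) for n in range(480)]

left = mono[:]              # left speaker gets the signal as-is
right = [-x for x in mono]  # right speaker gets it with flipped polarity

mid, side = mid_side(left, right)
print(max(abs(m) for m in mid))   # 0.0: nothing survives in the mono sum
print(max(abs(s) for s in side))  # same peak as the original mono signal
```

Every sample of the mid cancels to zero, while the side ends up identical to the original signal, which is exactly why the effect is all "side" and disappears when summed.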

I think about mixing in terms of three dimensions: amplitude (volume), frequency and spatial information. All of those need contrast and movement to make an interesting mix (well, IMO at least), and a lot of mixing problems can also be solved or helped in the spatial domain. Say you have two instruments that are masking each other, but you really like both sounds and don’t want to mess them up. Well, you could “mirror EQ” them, but then you would risk ruining the sounds (frequency domain). You could sidechain one to the other, but this might cause pumping artifacts (volume domain). Or you can solve the problem by just hard panning the elements to opposite sides of the stereo image (spatial domain). In stereo listening situations that might be all you need, though I usually do some subtle frequency carving and boosting in those situations too, just to make the sounds even more distinctly separate. And yes, this is a generalisation and a simplification; these are obviously not the only choices. But my point is that if I were too focused on how the mix sounds in mono, I would never even come up against this as a potential solution. Hence I would actually be sacrificing my stereo mix just for the sake of a good mix in mono. And as far as I’m concerned, that’s a really bad deal.
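
Here’s a rough Python sketch of that trade-off. It uses a constant-power (sin/cos) pan law, which is one common choice and not necessarily what your DAW’s panner does; `a` and `b` are hypothetical sample values standing in for the two masking instruments. Hard panned, each instrument lands in its own channel; a plain L + R fold-down stacks them right back on top of each other.

```python
import math

def pan(signal, position):
    """Constant-power pan. position: -1.0 = hard left, 0.0 = centre, 1.0 = hard right."""
    angle = (position + 1) * math.pi / 4  # maps -1..1 onto 0..pi/2
    gain_l, gain_r = math.cos(angle), math.sin(angle)
    return [x * gain_l for x in signal], [x * gain_r for x in signal]

# Two hypothetical instruments fighting over the same frequency range.
a = [0.5, 0.25, -0.5, 0.0]
b = [0.25, -0.5, 0.5, 0.25]

a_l, a_r = pan(a, -1.0)  # instrument A hard left
b_l, b_r = pan(b, 1.0)   # instrument B hard right
left = [x + y for x, y in zip(a_l, b_l)]
right = [x + y for x, y in zip(a_r, b_r)]

# Simple mono fold-down: both instruments end up in the same signal again.
mono = [l + r for l, r in zip(left, right)]
```

Note that cos(pi/2) isn’t exactly zero in floating point, so "hard right" leaks a vanishingly small amount into the left channel; that’s a numerical detail, not an audible one.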

But yeah, since spatial stuff is a big part of my music making and mixing (I’m into binaural stuff big time), I just simply don’t care about how my mixes sound in mono, at all. That being said, I do have a dedicated single-driver speaker setup for mono checking (contradictory and paradoxical, I know). For me, in a music making/production context, mono means summing everything into a single driver, not playing my 2.1 in mono. That’s because in the real world, if someone is listening to music in mono, it’s most likely through a single driver. If I’m playing my 2.1 in mono, I still have the speakers’ crossover filters in the signal path, and multiple drivers pumping out different parts of the spectrum. I’m probably thinking about this way too deeply, but as I said, I started thinking about this a few years ago.

Mixcube-type speakers (single-driver, full-range speakers in a closed enclosure) have many benefits, and some people swear by them. I just use one as my second monitoring system.

Well, there are many approaches. One thing I’ve learned is that thinking in terms of contrast is a really good way to make your track feel wide and spatially open. I always have a stereo expander on my mixbus, and before drops or anything else that needs an injection of energy, I make the stereo image narrower, even totally mono if that serves the purpose. Then, when the track needs the energy back, I just open the stereo field back up to normal. You could also go in the opposite direction. Automation is the word of the day here.
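
A minimal Python sketch of that width automation, assuming the expander works by scaling the side signal (a common approach, but plugins differ under the hood). The per-sample `widths` list stands in for a DAW automation lane; `apply_width_automation`, `ramp` and the sample values are all hypothetical.

```python
def apply_width_automation(left, right, widths):
    """Scale the side signal per sample: 0.0 = mono, 1.0 = unchanged, >1.0 = wider."""
    out_l, out_r = [], []
    for l, r, w in zip(left, right, widths):
        mid = (l + r) / 2
        side = (l - r) / 2 * w
        out_l.append(mid + side)  # decode back to L/R: L = M + S
        out_r.append(mid - side)  # R = M - S
    return out_l, out_r

def ramp(start, end, length):
    """Linear automation ramp from start to end over `length` samples."""
    if length == 1:
        return [end]
    return [start + (end - start) * i / (length - 1) for i in range(length)]

# Hypothetical build-up: narrow to mono over the build, snap back to full width at the drop.
widths = ramp(1.0, 0.0, 4) + [1.0] * 4
left = [1.0, 0.5, 0.25, 0.0, 1.0, 0.5, 0.25, 0.0]
right = [0.0, 0.5, 0.75, 1.0, 0.0, 0.5, 0.75, 1.0]
out_l, out_r = apply_width_automation(left, right, widths)
```

At the end of the build both channels carry the same signal (pure mono), and at the drop the original stereo image comes back untouched. Going the other way is just a different ramp.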

You can also give a track contrast in the spatial dimension by having a single element that is extremely different spatially. It could be an extremely wide reverb against a mono vocal, or some effect that lives only in the side signal. Or it could be a single mono element in an otherwise very wide mix. It all depends on the situation.

Then there’s the whole world of binaural stuff. That’s where you can go beyond the normal stereo image in headphone listening. Some binaural stuff is just straight-up magical; it can feel like you’re really there. I have a couple of plugins and impulse responses for binaural stuff, and a pair of in-ear microphones for recording my own binaural foley, ambiance etc. Immersive audio in general is really worth looking into, if you haven’t already.

I think that with binaural, the same idea of contrast works really well: if you make everything binaural, it can get really messy, and my brain actually loses the pristine 3D image of the sound. It’s similar to how making everything really wide can make your mix feel unfocused and empty in the middle of the stereo field. You can create the contrast either with different elements playing simultaneously (e.g. one binaural element against many non-binaural elements), or with arrangement and structure (one section that’s a binaural recording in the middle of a more “normal” mix can be super duper powerful and really draw the listener in).

You can also think of binaural stuff as an alternative to panning. Now you don’t just have the L-to-R axis, but a whole 360-degree space to place your sounds in. If memory serves me right, Rob Clouth has panned some elements in his tracks by putting a pair of in-ear microphones in his ears and moving around his studio while playing those elements through the speakers. (I think it was the pad sound in “List of lists” by his alias “Vaetxh”.)
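
To get a feel for just one of the cues binaural placement relies on, here’s a Python sketch of the interaural time difference (ITD) using Woodworth’s spherical-head approximation, ITD = (r / c)(θ + sin θ). The head radius and speed of sound are typical assumed values, and `place` is a hypothetical helper. This models timing only: real binaural rendering also uses the level and spectral cues captured in a head-related transfer function (HRTF), which is what gives you front/back and elevation. So treat it as a crude left/right panner, not a binaural renderer.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly at room temperature (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius (assumed)

def woodworth_itd(azimuth_deg):
    """ITD in seconds from Woodworth's spherical-head model.

    0 degrees = straight ahead, positive = towards the right ear.
    The formula only covers -90..90 degrees (no front/back information)."""
    if abs(azimuth_deg) > 90:
        raise ValueError("azimuth must be between -90 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))

def itd_in_samples(azimuth_deg, sample_rate=48_000):
    """ITD rounded to whole samples (negative = left ear leads)."""
    return round(woodworth_itd(azimuth_deg) * sample_rate)

def place(signal, azimuth_deg, sample_rate=48_000):
    """Crude lateral placement: delay the ear that is farther from the source."""
    delay = abs(itd_in_samples(azimuth_deg, sample_rate))
    delayed = [0.0] * delay + list(signal)
    direct = list(signal) + [0.0] * delay
    if azimuth_deg >= 0:  # source on the right, so the left ear hears it later
        return delayed, direct
    return direct, delayed
```

With these values a source at 90 degrees gets roughly 0.66 ms of delay to the far ear, about 31 samples at 48 kHz.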

In my experience, the binaural stuff works pretty damn well with stereo speakers too, it usually just feels like a weird spatial effect. But it can potentially cause phase issues if you’re worried about those.

Then there’s the whole rabbit hole of the much-discussed mid-side trickery. A LOT can be done with that too. I usually have a mid-side “skew” utility on my mixbus as well, so that I can blend between the mid and side signals like a mix knob (0% = only mid, 50% = normal, 100% = only side). It can be useful as a creative effect, or for checking your mid and side signals separately.
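
A possible way to build that kind of skew knob, sketched in Python. The gain curve is my own assumption, not how any particular utility plugin does it: the mid stays at full level from 0% to 50% and fades out towards 100%, while the side fades in from 0% to 50% and stays at full level above that, so 50% leaves the mix untouched. `ms_skew` is a hypothetical name.

```python
def ms_skew(left, right, skew):
    """Blend mid vs side: 0.0 = only mid, 0.5 = unchanged, 1.0 = only side."""
    if not 0.0 <= skew <= 1.0:
        raise ValueError("skew must be between 0.0 and 1.0")
    mid_gain = min(1.0, 2 * (1 - skew))  # full level up to 0.5, then fades out
    side_gain = min(1.0, 2 * skew)       # fades in up to 0.5, then full level
    out_l, out_r = [], []
    for l, r in zip(left, right):
        mid = (l + r) / 2 * mid_gain
        side = (l - r) / 2 * side_gain
        out_l.append(mid + side)
        out_r.append(mid - side)
    return out_l, out_r
```

At 0.0 both channels carry the same signal (a mono check), and at 1.0 the two channels are polarity-inverted copies of each other (side only).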

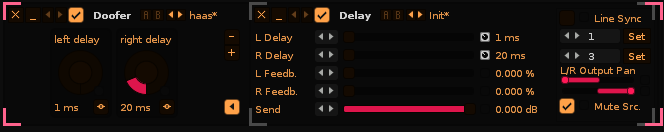

You could also, for example, take the old concept of the ping-pong delay and make it “ping-pong” between the mid and side signals (instead of just L and R). It can sound really cool, kinda like the delay is bouncing back and forth depthwise. Or you could try the same with the Haas effect: delaying the mid signal relative to the side signal or vice versa. Mid-side stuff in general is exceptional for creating a sense of depth. Boosting the side signal in reverbs is one way of potentially making them wider. And mid-side EQ can do a huge amount for your stereo image. The possibilities with mid-side processing are endless. It’s one hellishly deep rabbit hole, but it certainly is one worth looking into.
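
Here’s one way the mid/side ping-pong could be sketched in Python, under some loud assumptions: the input is a mono source sitting in the mid, odd-numbered echoes land in the side and even-numbered ones back in the mid, and each echo is scaled by `feedback ** k`. The taps are written out explicitly rather than as a real feedback loop, just to keep it readable; `ms_ping_pong` and its parameters are hypothetical.

```python
def ms_ping_pong(mono, delay_samples, feedback=0.5, repeats=4):
    """Hypothetical mid/side ping-pong delay. Returns (left, right).

    Dry signal sits in the mid; echo 1 goes to the side, echo 2 back to
    the mid, echo 3 to the side, and so on, each scaled by feedback ** k."""
    length = len(mono) + delay_samples * repeats
    mid = [0.0] * length
    side = [0.0] * length
    for n, x in enumerate(mono):
        mid[n] += x  # dry signal, centred
        for k in range(1, repeats + 1):
            target = side if k % 2 else mid  # odd echoes -> side, even -> mid
            target[n + k * delay_samples] += x * feedback ** k
    # Decode M/S back to L/R (L = M + S, R = M - S).
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right
```

The echoes that land in the side come out polarity-inverted between the speakers, so, in keeping with the rest of this thread, they drop out completely in a mono fold-down.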