Wouldn’t it be nice if we could load the new modulation set as a meta device (insert effect)? That way we would have access to all FX parameters. Of course, the meta-modulation should be able to receive gate data.

+1 for meta-modulators controlling params on other sets

My mind almost exploded thinking about it, but it would be cool to have.

Oh OK, then it is what I proposed during the alpha stage and no one replied.

Maybe because they can’t precisely imagine the benefits of it.

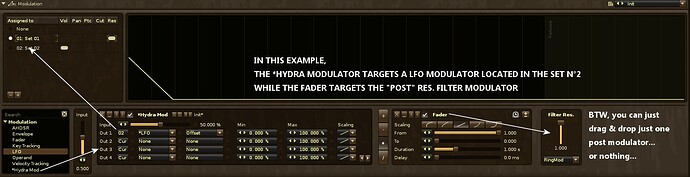

There is a meta device called Instr. Macro that is “a bridge” between track DSPs and instrument modulations, but it is a one-way bridge. I have often asked myself “why” these last few days. I’m sure that the alpha testers and the dev team thought about a two-way bridge, or a modulator that could control some parameters in the track DSPs. The first idea that could explain why it has not been done is :

But today, following a suggestion from Achenar, I thought of another reason: differences in modulator architecture, and latency problems.

Architecture :

Modulations from the modulation set on one hand, and meta devices on the other (which are also modulators, but focused on track DSPs), are not technically the same thing, even if they share nearly the same look and feel. For example, building a chain of modulations in a modulation set gives you an “instant” visualisation of what WILL happen. The modulation visualisation doesn’t only show what’s going on “in realtime”, it also shows “the future”: for example, how “the pitch” will behave over 2 seconds if a note is triggered and the associated sample is played. By contrast, nothing in the Renoise track DSP layer “automatically draws” or “predicts” the future shape of a random LFO DSP. That is somehow possible in the instrument modulators: a “random LFO” can be refreshed internally on a 20 Hz basis.

So I suspect (and maybe someone on the dev team could explain it better or correct me on this point) that each sample modulation follows its own timeline, with its “own timer”; that each sample modulation is pre-computed and stored separately (requiring dedicated memory and a buffer); and that each modulation is “refreshed” on demand most of the time, and when it has to be refreshed in realtime, it is refreshed at a maximum rate of 20 Hz. (Well, I suppose so... it’s hypothetical.) It looks like a kind of mini automation curve generator for each sample. For this reason, storing just one instrument modulation curve for a sample would also imply storing in memory all the subsequent curves logically attached to it. Now imagine that you work with polyphonic instruments that contain 50 samples, each one triggering the behaviour of the others: it would dramatically increase the memory required in the playback buffer, and the latency, especially if you link too many modulations together.

Imagine that Renoise has a “send” modulator that sends the result of one modulation set to another modulation set, and that an instrument has 50 samples with 50 send modulator devices. This simple function would require refreshing 50 curves all together in realtime, which would probably lead to latency problems.

Latency :

It’s the same with another aspect of Renoise routing: track groups. You can’t nest more than 6 levels of track groups. Why? Because routings, like everything, need time, CPU, and buffers. The more you nest tracks inside tracks, the longer the path the sound follows through the software. And if the path is too long, the whole playback routine will produce too much buffering, and so: a crazy latency. Same thing with Doofers! Haven’t you noticed that once a DSP chain is dooferized, you can’t modulate or automate anything but the Doofer macro knobs? All the parameters encapsulated inside the Doofer are no longer available to external meta devices. That is a willful limitation. It’s also obvious that the devs have limited the usage of instruments with FX chains in the pattern editor to 1 track at a time, for the same reasons: decreasing the risk of latency and optimizing CPU usage.

Solution :

So, having said that, here is my idea: introduce willful limitations. For example, limit the number of possible cross-modulation sets / FX chains to... 8! Limit “the path” of cross-modulation sets to a vertical path: a cross-modulation set can only modulate a “following set” and not a “previous set” (a bit like the way the Signal Follower behaves in the track DSPs: you can’t follow something that happens “after” your own behaviour; it’s a temporal paradox that Renoise has worked around by introducing a “rule”). Likewise, if you can’t use more than “8 macros” between track DSPs and instrument modulators, the same limit should apply on the other side: you can’t modulate more than 8 FX chain parameters for the same instrument.
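
To make these two rules concrete, here is a rough sketch in Python of how such a check could look. It is only an illustration of the idea, not how Renoise works internally: the function name `validate_links`, the `(source, target)` index pairs and the constant `MAX_CROSS_LINKS` are all made up for this example.

```python
# Hypothetical sketch of the two proposed rules; nothing here is a Renoise API.
MAX_CROSS_LINKS = 8  # assumed cap, mirroring the 8-macro limit mentioned above


def validate_links(links, num_sets, max_links=MAX_CROSS_LINKS):
    """Check cross-modulation links given as (source_set, target_set) indices.

    Returns a list of error messages; an empty list means the routing
    respects both rules.
    """
    errors = []

    # Rule 1: no more than `max_links` cross modulations per instrument.
    if len(links) > max_links:
        errors.append(f"too many links: {len(links)} > {max_links}")

    for source, target in links:
        if not (0 <= source < num_sets and 0 <= target < num_sets):
            errors.append(f"link {source}->{target}: set index out of range")
        # Rule 2: a set may only modulate a *following* set, so the routing
        # can never loop back on itself.
        elif target <= source:
            errors.append(f"link {source}->{target}: target must come after source")

    return errors


if __name__ == "__main__":
    print(validate_links([(0, 1), (1, 2)], num_sets=3))  # allowed routing
    print(validate_links([(2, 1)], num_sets=3))          # backward link is rejected
```

Because every link points “downwards”, the sets could be evaluated in a single pass from first to last, with no feedback loop to resolve. That is the kind of compromise I have in mind.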

I know that introducing a few rules into a modulation system logically decreases its flexibility and, consequently, the creativity we can expect from it. But there are probably fair compromises to be found.