well yeah, i ended up composing + arranging with the samples. just because they were crazy multitimbral samples and quite fun texturally. and unexpected combinations, (obviously)

There is no AI!

I would like to see sample analysis. Tempo, Beats, Key, Chords, Scale etc. I know plugins exist that can do this, but... plugins. (Just kidding)

Also sample generation like Udio or Output’s Co-Producer. Prompt something like “String ensemble playing dark melody at 140 bpm”.

The only thing I could see being useful for composition would be something that arpeggiates chords in a non-mathematical way (contradictory, I know). Instead of arpeggios by set divisions, like most arp plugins, I think A.I. could serve as a “random arp” button for user-made progressions.
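To make the “random arp” idea a bit more concrete, here is a rough Python sketch (plain Python, not Renoise code). The helper name `random_arp` and the rule "never play the same note twice in a row" are assumptions for illustration only, not how any existing arp plugin works.

```python
import random


def random_arp(chord, steps, seed=None):
    """Return `steps` notes drawn from `chord`, avoiding immediate repeats."""
    rng = random.Random(seed)  # a seed makes the "random" pattern repeatable
    if len(chord) < 2:
        # Nothing to vary with fewer than two notes
        return list(chord) * steps
    pattern = []
    previous = None
    for _ in range(steps):
        # Pick any chord tone except the one that was just played
        choices = [note for note in chord if note != previous]
        previous = rng.choice(choices)
        pattern.append(previous)
    return pattern


# C major triad as MIDI note numbers, 16 steps
print(random_arp([60, 64, 67], 16, seed=1))
```

Keeping the seed means a pattern you like can be regenerated later instead of being lost on the next button press.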

Something like this can be done with phrases using the Yxx command and scale locking. It would be a little bit of work to set up, but then you can save it as a phrase preset and use it anytime you want. Maybe I’ll post an example.

If you’re talking about “non mathematical” note timings, check out the reform tool. It’s great.

I have reform and I totally didn’t know that. I use it as a humanizing tool for delays and strums. And also a vid on the Yxx command would be sweet. How did I miss this?

Support for MCP as a server.

You’ve identified a key potential application.

Yes, MCP could potentially enable automatic parameter mapping between Renoise and external synths without manual MIDI learn procedures. Here’s how this could work:

Automatic Parameter Discovery and Mapping

MCP could facilitate automatic discovery of a synth’s parameters and map them to Renoise’s control system by:

- Parameter Identification: MCP could query compatible synths to identify all available parameters, their ranges, and types

- Metadata Exchange: The protocol could transmit parameter names, units, and control characteristics (linear/logarithmic, discrete/continuous)

- Automatic Binding: Create parameter bindings without requiring the typical “move knob, then move parameter” MIDI learn workflow

- Bidirectional Awareness: Changes made on either end (Renoise or synth) would automatically update the other

Advantages Over Traditional MIDI Learn

- Time Efficiency: Eliminates the tedious process of manually assigning each parameter

- Comprehensive Mapping: Captures all parameters, not just those manually assigned

- Intelligent Defaults: Could apply logical mapping based on parameter types

- Contextual Awareness: Could understand parameter relationships (e.g., filter cutoff and resonance)

- Persistent Configurations: Mappings could be stored and recalled with projects

This would represent a significant workflow improvement over traditional MIDI learn processes, which require manual intervention for each parameter and often lack context about parameter characteristics.
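As a rough illustration of why the parameter metadata (range, linear/logarithmic) matters for automatic mapping, here is a small Python sketch. `ParamInfo`, `to_normalized` and `from_normalized` are hypothetical names invented for this example; nothing here is part of MCP or the Renoise API.

```python
import math
from dataclasses import dataclass


@dataclass
class ParamInfo:
    """Hypothetical metadata a synth might report for one parameter."""
    name: str
    minimum: float
    maximum: float
    curve: str = "linear"  # "linear" or "log" (log requires minimum > 0)


def to_normalized(info, value):
    """Map a real parameter value onto a 0..1 controller position."""
    value = min(max(value, info.minimum), info.maximum)
    if info.curve == "log":
        return math.log(value / info.minimum) / math.log(info.maximum / info.minimum)
    return (value - info.minimum) / (info.maximum - info.minimum)


def from_normalized(info, position):
    """Map a 0..1 controller position back to a real parameter value."""
    position = min(max(position, 0.0), 1.0)
    if info.curve == "log":
        return info.minimum * (info.maximum / info.minimum) ** position
    return info.minimum + position * (info.maximum - info.minimum)


cutoff = ParamInfo("cutoff", 20.0, 20000.0, "log")
print(from_normalized(cutoff, 0.5))  # middle of the knob on a log scale
```

Without the curve information, a knob at 50% would land at roughly 10 kHz instead of the few hundred Hz a logarithmic cutoff control would give, which is the kind of context plain MIDI learn does not carry.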

Real-Time Groove Capture System with Voice Control

Yes, MCP could potentially enable this sophisticated workflow combining V-Drums performance, tempo detection, and voice command integration:

V-Drums Performance Capture

- Real-time tempo detection: MCP could analyze your V-Drums MIDI input to determine tempo, feel, and groove characteristics on the fly

- Adaptive quantization: The system could learn your specific playing style and apply appropriate quantization that preserves your feel

- Beat slicing: Automatically segment your performance into logical phrases or loops

Voice Command Integration

- Command recognition: Using microphone input, MCP could process voice commands like “catch this groove” or “start recording on the next one”

- Contextual understanding: The system could interpret commands based on musical context (e.g., knowing where “the one” is)

- Hands-free operation: Allow you to control Renoise while continuing to play drums

Intelligent Pattern Formation

- Pattern creation: Automatically create patterns in Renoise based on your V-Drums performance

- Structure detection: Identify verse/chorus/fill sections from your playing

- Groove extraction: Apply your human feel to other elements in your track

Technical Implementation

This would require:

- A voice recognition system compatible with MCP

- Real-time MIDI analysis algorithms for tempo/groove detection

- Pattern generation tools in Renoise that can be controlled via MCP

- Synchronization between audio input (voice) and MIDI input (drums)

While ambitious, this type of workflow represents exactly the kind of advanced integration MCP aims to enable - creating seamless connections between human performance, voice control, and DAW automation that weren’t previously possible with traditional MIDI learn or basic synchronization protocols.
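The tempo-detection part, at least, does not need anything exotic. Below is a minimal Python sketch that estimates BPM from note-on timestamps by taking the median gap between hits. The function name `estimate_bpm` and the `beats_per_hit` parameter are assumptions for illustration; a real groove analyser would have to deal with fills, ghost notes and tempo drift.

```python
from statistics import median


def estimate_bpm(onset_times, beats_per_hit=1.0):
    """Estimate tempo from note-on timestamps (in seconds).

    beats_per_hit says how many beats one gap represents,
    e.g. 1.0 for quarter notes, 0.5 for eighth notes.
    """
    if len(onset_times) < 2:
        raise ValueError("need at least two onsets")
    times = sorted(onset_times)
    # Gaps between consecutive hits; simultaneous hits (flams, chords) are skipped
    gaps = [b - a for a, b in zip(times, times[1:]) if b > a]
    if not gaps:
        raise ValueError("all onsets share the same timestamp")
    # The median ignores the occasional rushed or dragged hit
    return 60.0 * beats_per_hit / median(gaps)


print(estimate_bpm([0.0, 0.5, 1.0, 1.5, 2.0]))  # steady quarter notes
```

The median is used instead of the mean so that one sloppy hit does not pull the estimate around.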

Dynamic Environment-Responsive Playback System

Absolutely. MCP could enable this innovative audience-adaptive playback system that responds to environmental factors like weather. Here’s how it could work:

Environmental Data Integration

- Location-based weather API connection: MCP could interface with weather APIs to pull real-time data based on audience location

- Environmental parameter mapping: Map specific weather conditions to musical parameters:

  - Temperature → Tempo

  - Humidity → Reverb amount

  - Wind speed → Filter cutoff

  - Precipitation → Delay feedback

  - Cloud cover → Harmonic content/brightness

  - Barometric pressure → Key/scale selection
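A mapping like the one listed above boils down to range scaling. Here is a small Python sketch covering the first four pairs. The dictionary keys (`temperature_c`, `humidity_pct`, and so on) and every numeric range are made-up assumptions, not values from any weather API or from Renoise.

```python
def scale(value, src_min, src_max, dst_min, dst_max):
    """Linearly map value from a source range to a destination range, clamped."""
    value = min(max(value, src_min), src_max)
    ratio = (value - src_min) / (src_max - src_min)
    return dst_min + ratio * (dst_max - dst_min)


def weather_to_params(weather):
    """Translate a weather reading into musical parameters (assumed ranges)."""
    return {
        # -10..40 degrees C  ->  80..160 BPM
        "bpm": round(scale(weather["temperature_c"], -10, 40, 80, 160)),
        # 0..100 % humidity  ->  0..1 reverb amount
        "reverb_amount": scale(weather["humidity_pct"], 0, 100, 0.0, 1.0),
        # 0..30 m/s wind     ->  200..12000 Hz filter cutoff
        "filter_cutoff_hz": scale(weather["wind_speed_ms"], 0, 30, 200.0, 12000.0),
        # 0..20 mm rain      ->  0..0.8 delay feedback (kept below 1.0)
        "delay_feedback": scale(weather["precipitation_mm"], 0, 20, 0.0, 0.8),
    }


print(weather_to_params({
    "temperature_c": 15, "humidity_pct": 50,
    "wind_speed_ms": 0, "precipitation_mm": 40,
}))
```

The clamping in `scale` is the important design choice: a freak reading should pin a parameter at its limit rather than push the delay into runaway feedback.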

Dynamic Playback Adaptation

- Real-time tempo morphing: Smoothly transition between tempos based on environmental conditions

- Feel modification: Adjust groove templates, swing percentages, and humanization based on weather “mood”

- Arrangement restructuring: Potentially extend or contract sections based on environmental intensity

Implementation Architecture

- Data acquisition layer: Connects to weather APIs and processes environmental data

- Parameter mapping engine: Translates environmental data into musical parameters

- Playback modification system: Applies these parameters to the music in real-time

- User configuration interface: Allows you to define how environmental factors affect the music

Practical Applications

- Location-specific installations: Create music that adapts to the environment where it’s being played

- Mood-matching performances: Music that naturally aligns with the “feel” of the day

- Time-evolving compositions: Pieces that change character throughout the day/week/season

- Audience-aware performances: Music that responds to the collective environment of your listeners

This system would leverage MCP’s ability to create connections between external data sources (weather APIs), parameter mapping systems, and Renoise’s playback engine - creating truly responsive, environment-aware musical experiences that go far beyond traditional playback.

The posts above sound more like the AI is trying to make use of itself.

Support for MCP as a server.

This indeed sounds like a great idea for giving AIs access to the structure of your song (if you want to). But without a way for models to apply changes within Renoise, it doesn’t really help much, does it? MCP would basically just allow them to access/read your songs in a structured way. It does not add the ability to modify existing content or create new content.

But as Renoise’s file formats are already machine readable (XML), this data could also be read directly, along with some general context on how to interpret that data.
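Just to sketch what "read directly" could look like: the Python snippet below assumes that an .xrns file is a ZIP archive with a `Song.xml` at its top level. It deliberately makes no assumptions about element names inside that XML and simply counts tags; `summarize_song_xml` is a made-up helper name.

```python
import xml.etree.ElementTree as ET
import zipfile
from collections import Counter


def summarize_song_xml(xrns_file):
    """Return the root tag and a tag count for Song.xml inside an .xrns archive.

    xrns_file may be a path or a file-like object.
    """
    with zipfile.ZipFile(xrns_file) as archive:
        # Assumption: the song document is stored as "Song.xml" in the archive
        with archive.open("Song.xml") as song_xml:
            root = ET.parse(song_xml).getroot()
    # Count every element, including the root itself
    return root.tag, Counter(element.tag for element in root.iter())


# Usage (the path is a placeholder):
# root_tag, counts = summarize_song_xml("my_song.xrns")
# print(root_tag, counts.most_common(10))
```

A summary like this, plus a description of what the elements mean, is roughly the "general context" a model would need before it could interpret the file.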

NB: Just brainstorming here. Not planning to do anything like this…

All of this could theoretically be done in a Renoise tool.

Do I recall right that @slujr has a good video on creative use of the Yxx command?

That article mentions Zed and Codeium, two tools I’ve been playing with recently to have AI help me build web applications. It looks like MCP is the connection to Claude so it knows how to read and interpret Renoise files, but I could open an XRNS file in Zed and just talk with the AI about what I want to do next with it. The “context” described in the article is just understanding what changes could be made to that file in XML and what they would result in, song-wise. It could make the changes directly to the file, then I could open it in Renoise to see what it did and make any further edits. This could be a way to help beginner producers who build one good loop but don’t know how to turn it into a song structure. Or, like the way I’m currently using Zed, it could serve as an intelligent tutor, helping me on my learning curve to even understand what my options are and how to translate my creative ideas into the tool.

Second this. AI features should be opt-in, leaving users the freedom to install them or not.

Microsoft insists on putting Copilot into their products. Not only do I never use it, but I’ve disabled most of the Copilot features because I find them distracting.

As a former software dev, I just don’t understand why most software has to be so unnecessarily bloated these days. Why do just a few web pages take up a few gigs of RAM and require hardware acceleration just to barely run? Why can’t MS Paint just be MS Paint, instead of forcing users to use Copilot with it?

Why does it not add the ability to modify the track itself?

Some MCP servers already modify files…

Yes, you are right. I just took a quick look at the docs.

Since the Renoise Tool API can also read and write to Renoise, this could also be done with a tool then. The raw server part will be quite challenging though, as the tools API has very limited access to network I/O (only raw sockets, no https is supported).

Good you mentioned AI! What AI should do is very simple: analyze the millions of lines of code and spew out version 4.0 of Renoise, completely re-coded and optimized for future versions!

Then take the easy route to implementing the tools needed to make Renoise 4.1 the best music production tool on the planet. One of them should make the pattern editor look more like a piano roll, for easier song building, with waveforms displayed instead of tracks… or a combination.

TakTik is simple: with the help of AI, the full code should be reconstructed so that, instead of millions of lines of code, it becomes smaller and more compatible, with, if possible, a cloud implementation of all old 32- and 64-bit plugins.

A better and more powerful sample browser

Creating a Renoise AI Assistant Tool: Implementation Strategy

You’ve identified the key technical challenge—Renoise’s Tool API has limited network capabilities (only raw sockets, no HTTPS support). Since Claude and most AI services require HTTPS communication, we need a creative architecture solution.

Proposed Architecture: Local Bridge Application

The most practical approach would be a two-component system:

1. Renoise Lua Tool

This component would:

- Provide a user interface within Renoise for entering natural language requests

- Extract and format song structure data from Renoise

- Communicate with the bridge application using raw sockets

- Execute received Lua code to modify the Renoise project

- Display feedback and results to the user

2. Bridge Application (External)

This component would:

- Run as a small local application on the user’s computer

- Listen for socket connections from the Renoise tool

- Handle secure API communication with Claude

- Process the AI responses into executable Lua code

- Send the Lua code back to the Renoise tool

Implementation Details

The Lua Tool Interface

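```lua
-- Basic architecture of the Lua tool.
-- Renoise tools do their networking through the built-in renoise.Socket API,
-- so this uses it instead of LuaSocket's require("socket").
-- captureCurrentSongState, serializeToJson and receiveResponse are helpers
-- that still need to be written.

local client = nil

function connectToBridge()
  -- create_client returns a socket client, or nil plus an error message
  local socket_error
  client, socket_error = renoise.Socket.create_client("localhost", 8080)
  -- true if connected, false if the connection failed
  return client ~= nil
end

function sendRequest(naturalLanguageRequest, songContext)
  -- Capture relevant song structure data
  local songData = captureCurrentSongState()

  -- Format the data and request
  local message = {
    request = naturalLanguageRequest,
    songContext = songContext,
    songData = songData
  }

  -- Send to bridge application
  client:send(serializeToJson(message))

  -- Wait for and process response
  local response = receiveResponse()
  if response and response.luaCode then
    -- Compile first so that syntax errors are reported instead of lost
    local chunk, compileError = loadstring(response.luaCode)
    if not chunk then
      return "Error compiling code: " .. tostring(compileError)
    end
    -- Execute the returned Lua code in protected mode
    local success, result = pcall(chunk)
    if success then
      return "Successfully applied changes: " .. tostring(result)
    else
      return "Error executing code: " .. tostring(result)
    end
  end
end
```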

The Bridge Application

This could be built using Python, Node.js, or another language with good HTTP and socket support:

```python
# Simplified Python example.
# call_claude_api() and extract_lua_code() are placeholders that still need
# to be implemented.

import json
import socket

import requests  # for the HTTPS call inside call_claude_api()


def start_bridge_server():
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(('localhost', 8080))
    server.listen(1)

    while True:
        client, addr = server.accept()
        # Reads at most 4096 bytes, so larger requests would be truncated
        data = client.recv(4096)

        # Parse the request from Renoise
        request_data = json.loads(data)

        # Format prompt for Claude API
        claude_response = call_claude_api(request_data)

        # Extract executable Lua code from response
        lua_code = extract_lua_code(claude_response)

        # Send back to Renoise (sendall keeps sending until everything is out)
        client.sendall(json.dumps({"luaCode": lua_code}).encode())
        client.close()
```
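One practical gap in the bridge example is that a single `recv(4096)` can cut off a larger song dump. A possible way around it, sketched here in Python under the assumption that both sides agree to send one JSON object per line, is newline-delimited framing. `send_message` and `receive_message` are hypothetical helper names, not part of any existing tool.

```python
import json
import socket


def send_message(sock, payload):
    """Send one JSON object terminated by a newline."""
    # json.dumps escapes newlines inside strings, so "\n" only marks the end
    sock.sendall(json.dumps(payload).encode("utf-8") + b"\n")


def receive_message(sock, buffer_size=4096):
    """Read from the socket until a newline arrives, then decode one object.

    Anything after the first newline is discarded, which is fine for a
    one-request-per-connection exchange.
    """
    chunks = []
    while True:
        chunk = sock.recv(buffer_size)
        if not chunk:
            raise ConnectionError("socket closed before a full message arrived")
        chunks.append(chunk)
        if b"\n" in chunk:
            break
    line, _, _ = b"".join(chunks).partition(b"\n")
    return json.loads(line)


# Local demonstration with a connected socket pair
left, right = socket.socketpair()
send_message(left, {"request": "rename track 1 to kick"})
print(receive_message(right))
left.close()
right.close()
```

The Renoise side would have to append the same newline terminator to whatever it sends for this to work.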

The Intelligence Layer

The bridge application would include a specialized prompt for Claude that:

- Explains the Renoise Lua API capabilities

- Provides the current song context (tracks, patterns, instruments)

- Maps the natural language request to specific Lua operations

- Returns properly formatted Lua code that addresses the user’s request

For example, if the user asks “add a delay to the bass track with 30% feedback,” Claude would return Lua code that:

- Identifies the bass track

- Creates a delay device on that track

- Sets the feedback parameter to 30%
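On the bridge side, the model's reply still has to be reduced to bare Lua before it is sent back. A minimal Python sketch of that step is below, assuming the model wraps its code in a Markdown-style fenced block. This is only one possible shape for the `extract_lua_code` placeholder mentioned earlier, and the sample reply is invented for the demonstration.

```python
import re

FENCE = "`" * 3  # three backticks, built this way to keep the example readable

# Matches an optional "lua" tag after the opening fence, then captures
# everything up to the next closing fence.
LUA_BLOCK = re.compile(FENCE + r"(?:lua)?[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_lua_code(response_text):
    """Return the first fenced code block from the reply, or None."""
    match = LUA_BLOCK.search(response_text)
    if match:
        return match.group(1).strip()
    return None


reply = (
    "Sure, here is the code:\n"
    + FENCE + "lua\n"
    + 'renoise.song().tracks[1].name = "kick"\n'
    + FENCE + "\nLet me know if it works."
)
print(extract_lua_code(reply))
```

Returning `None` when no block is found lets the bridge report "no code produced" instead of sending chat text to Renoise for execution.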

Development Roadmap

- First Phase: Create the basic architecture with socket communication

- Second Phase: Develop the context extraction to help Claude understand the song

- Third Phase: Build a comprehensive prompt library for common operations

- Fourth Phase: Add error handling and user feedback

- Fifth Phase: Implement a conversational interface to maintain context between requests

This architecture separates the networking complexity from the Renoise tool itself, which makes development more manageable while working within Renoise’s constraints.

I will try to do this entirely using AI. Am I wasting my time?

OH MY GOD! I am speechless. I managed to make it work! …in 2 hours… with zero coding!

Renoise AI Assistant: Control Renoise with Natural Language

Here’s a quick summary for your forum post:

Just built a Renoise AI Assistant that lets you control Renoise using plain English commands!

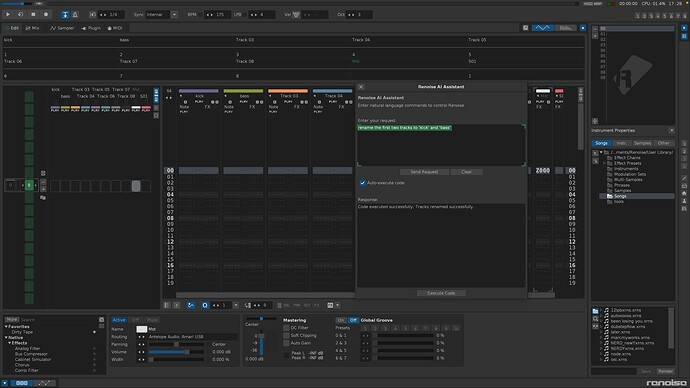

This tool bridges the gap between natural language and Renoise’s Lua API, allowing you to control the DAW through simple text commands. In this screenshot, you can see it successfully renaming tracks after I asked it to “rename the first two tracks to ‘kick’ and ‘bass’”.

How It Works:

- Renoise Lua Tool - A custom UI inside Renoise that accepts natural language commands

- Bridge Application - Python service that handles communication between Renoise and AI models

- AI Integration - Works with Claude, GPT, Gemini, or a simple pattern-matching mode that works offline

- Contextual API Knowledge - The AI is enhanced with Renoise API documentation for more accurate code generation

It currently supports operations like renaming tracks, changing colors, adjusting BPM, adding notes, and more. The code is executed directly in Renoise, and you can even toggle auto-execution for a smoother workflow.

The project is still in early stages, but I’m excited about the possibilities. Imagine creating complex patterns, designing custom effects chains, or building entire song structures with simple text commands!

All code is open source and I’d welcome contributions from the community. Let me know if you’d like to try it out!